09. PyTorch Model Deployment¶

Welcome to Milestone Project 3: PyTorch Model Deployment!

We've come a long way with our FoodVision Mini project.

But so far our PyTorch models have only been accessible to us.

How about we bring FoodVision Mini to life and make it publically accessible?

In other words, we're going to deploy our FoodVision Mini model to the internet as a usable app!

Trying out the deployed version of FoodVision Mini (what we're going to build) on my lunch. The model got it right too 🍣!

What is machine learning model deployment?¶

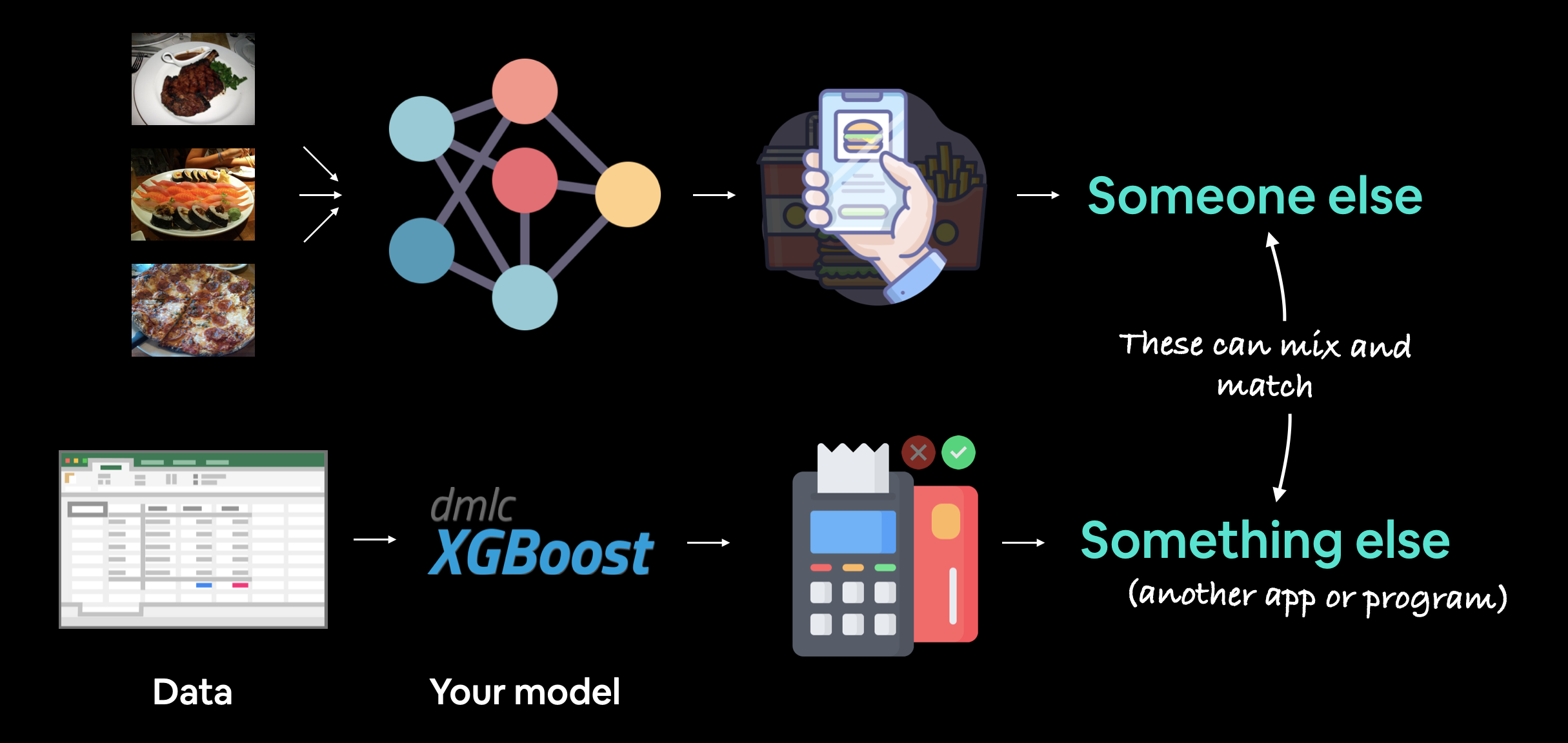

Machine learning model deployment is the process of making your machine learning model accessible to someone or something else.

Someone else being a person who can interact with your model in some way.

For example, someone taking a photo on their smartphone of food and then having our FoodVision Mini model classify it into pizza, steak or sushi.

Something else might be another program, app or even another model that interacts with your machine learning model(s).

For example, a banking database might rely on a machine learning model making predictions as to whether a transaction is fraudulent or not before transferring funds.

Or an operating system may lower its resource consumption based on a machine learning model making predictions on how much power someone generally uses at specific times of day.

These use cases can be mixed and matched as well.

For example, a Tesla car's computer vision system will interact with the car's route planning program (something else) and then the route planning program will get inputs and feedback from the driver (someone else).

Machine learning model deployment involves making your model available to someone or something else. For example, someone might use your model as part of a food recognition app (such as FoodVision Mini or Nutrify). And something else might be another model or program using your model such as a banking system using a machine learning model to detect if a transaction is fraud or not.

Why deploy a machine learning model?¶

One of the most important philosophical questions in machine learning is:

Deploying a model is as important as training one.

Because although you can get a pretty good idea of how your model's going to function by evaluting it on a well crafted test set or visualizing its results, you never really know how it's going to perform until you release it to the wild.

Having people who've never used your model interact with it will often reveal edge cases you never thought of during training.

For example, what happens if someone was to upload a photo that wasn't of food to our FoodVision Mini model?

One solution would be to create another model that first classifies images as "food" or "not food" and passing the target image through that model first (this is what Nutrify does).

Then if the image is of "food" it goes to our FoodVision Mini model and gets classified into pizza, steak or sushi.

And if it's "not food", a message is displayed.

But what if these predictions were wrong?

What happens then?

You can see how these questions could keep going.

Thus this highlights the importance of model deployment: it helps you figure out errors in your model that aren't obvious during training/testing.

We covered a PyTorch workflow back in 01. PyTorch Workflow. But once you've got a good model, deployment is a good next step. Monitoring involves seeing how your model goes on the most important data split: data from the real world. For more resources on deployment and monitoring see PyTorch Extra Resources.

Different types of machine learning model deployment¶

Whole books could be written on the different types of machine learning model deployment (and many good ones are listed in PyTorch Extra Resources).

And the field is still developing in terms of best practices.

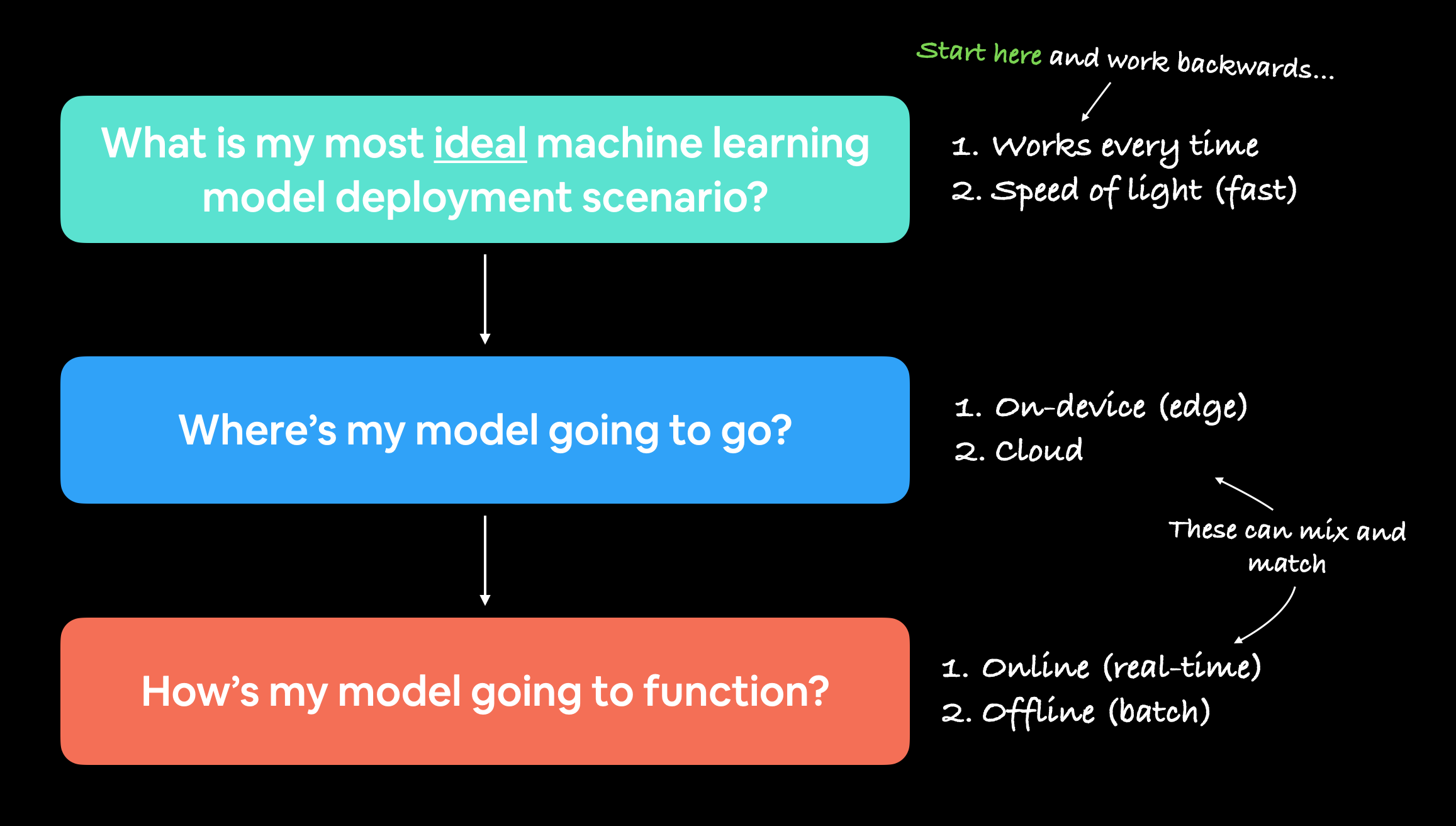

But I like to start with the question:

"What is the most ideal scenario for my machine learning model to be used?"

And then work backwards from there.

Of course, you may not know this ahead of time. But you're smart enough to imagine such things.

In the case of FoodVision Mini, our ideal scenario might be:

- Someone takes a photo on a mobile device (through an app or web broswer).

- The prediction comes back fast.

Easy.

So we've got two main criteria:

- The model should work on a mobile device (this means there will be some compute constraints).

- The model should make predictions fast (because a slow app is a boring app).

And of course, depending on your use case, your requirements may vary.

You may notice the above two points break down into another two questions:

- Where's it going to go? - As in, where is it going to be stored?

- How's it going to function? - As in, does it return predictions immediately? Or do they come later?

When starting to deploy machine learning models, it's helpful to start by asking what's the most ideal use case and then work backwards from there, asking where the model's going to go and then how it's going to function.

Where's it going to go?¶

When you deploy your machine learning model, where does it live?

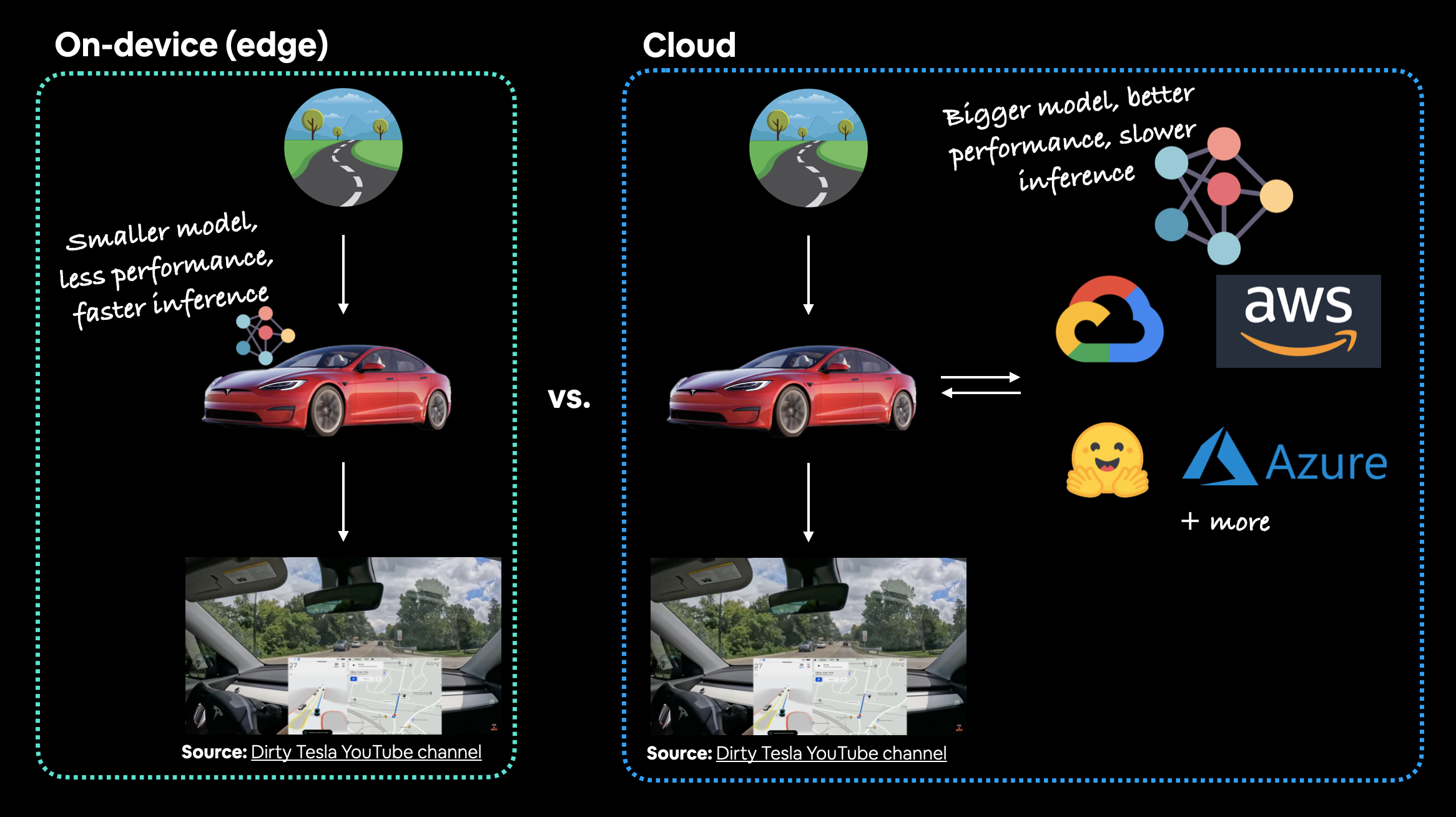

The main debate here is usually on-device (also called edge/in the browser) or on the cloud (a computer/server that isn't the actual device someone/something calls the model from).

Both have their pros and cons.

| Deployment location | Pros | Cons |

|---|---|---|

| On-device (edge/in the browser) | Can be very fast (since no data leaves the device) | Limited compute power (larger models take longer to run) |

| Privacy preserving (again no data has to leave the device) | Limited storage space (smaller model size required) | |

| No internet connection required (sometimes) | Device-specific skills often required | |

| On cloud | Near unlimited compute power (can scale up when needed) | Costs can get out of hand (if proper scaling limits aren't enforced) |

| Can deploy one model and use everywhere (via API) | Predictions can be slower due to data having to leave device and predictions having to come back (network latency) | |

| Links into existing cloud ecosystem | Data has to leave device (this may cause privacy concerns) |

There are more details to these but I've left resources in the extra-curriculum to learn more.

Let's give an example.

If we're deploying FoodVision Mini as an app, we want it to perform well and fast.

So which model would we prefer?

- A model on-device that performs at 95% accuracy with an inference time (latency) of one second per prediction.

- A model on the cloud that performs at 98% accuracy with an inference time of 10 seconds per per prediction (bigger, better model but takes longer to compute).

I've made these numbers up but they showcase a potential difference between on-device and on the cloud.

Option 1 could potentially be a smaller less performant model that runs fast because its able to fit on a mobile device.

Option 2 could potentially a larger more performant model that requires more compute and storage but it takes a bit longer to run because we have to send data off the device and get it back (so even though the actual prediction might be fast, the network time and data transfer has to factored in).

For FoodVision Mini, we'd likely prefer option 1, because the small hit in performance is far outweighed by the faster inference speed.

In the case of a Tesla car's computer vision system, which would be better? A smaller model that performs well on device (model is on the car) or a larger model that performs better that's on the cloud? In this case, you'd much prefer the model being on the car. The extra network time it would take for data to go from the car to the cloud and then back to the car just wouldn't be worth it (or potentially even impossible with poor signal areas).

Note: For a full example of seeing what it's like to deploy a PyTorch model to an edge device, see the PyTorch tutorial on achieving real-time inference (30fps+) with a computer vision model on a Raspberry Pi.

How's it going to function?¶

Back to the ideal use case, when you deploy your machine learning model, how should it work?

As in, would you like predictions returned immediately?

Or is it okay for them to happen later?

These two scenarios are generally referred to as:

- Online (real-time) - Predictions/inference happen immediately. For example, someone uploads an image, the image gets transformed and predictions are returned or someone makes a purchase and the transaction is verified to be non-fraudulent by a model so the purchase can go through.

- Offline (batch) - Predictions/inference happen periodically. For example, a photos application sorts your images into different categories (such as beach, mealtime, family, friends) whilst your mobile device is plugged into charge.

Note: "Batch" refers to inference being performed on multiple samples at a time. However, to add a little confusion, batch processing can happen immediately/online (multiple images being classified at once) and/or offline (multiple images being predicted/trained on at once).

The main difference between each being: predictions being made immediately or periodically.

Periodically can have a varying timescale too, from every few seconds to every few hours or days.

And you can mix and match the two.

In the case of FoodVision Mini, we'd want our inference pipeline to happen online (real-time), so when someone uploads an image of pizza, steak or sushi, the prediction results are returned immediately (any slower than real-time would make a boring experience).

But for our training pipeline, it's okay for it to happen in a batch (offline) fashion, which is what we've been doing throughout the previous chapters.

Ways to deploy a machine learning model¶

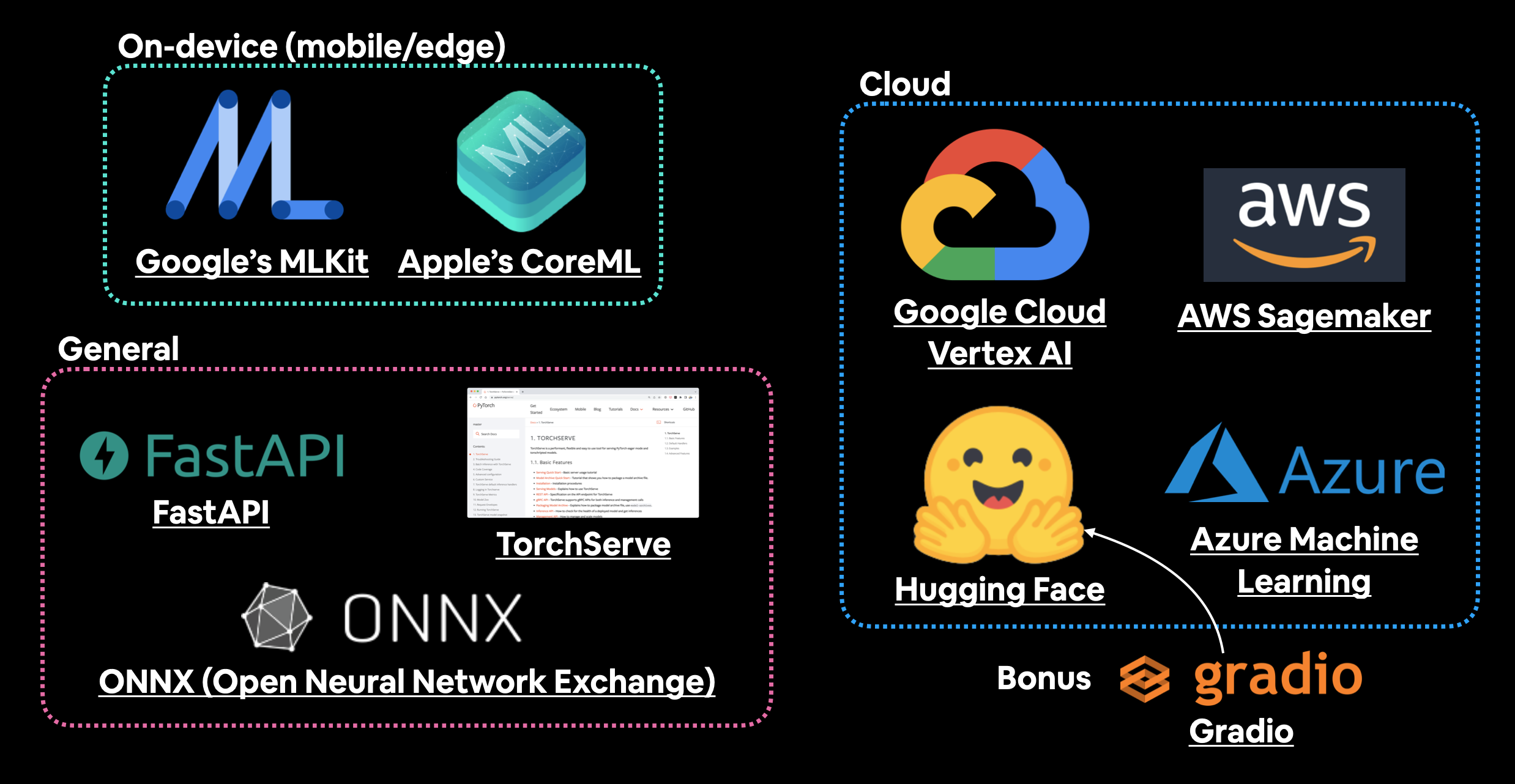

We've discussed a couple of options for deploying machine learning models (on-device and cloud).

And each of these will have their specific requirements:

| Tool/resource | Deployment type |

|---|---|

| Google's ML Kit | On-device (Android and iOS) |

Apple's Core ML and coremltools Python package |

On-device (all Apple devices) |

| Amazon Web Service's (AWS) Sagemaker | Cloud |

| Google Cloud's Vertex AI | Cloud |

| Microsoft's Azure Machine Learning | Cloud |

| Hugging Face Spaces | Cloud |

| API with FastAPI | Cloud/self-hosted server |

| API with TorchServe | Cloud/self-hosted server |

| ONNX (Open Neural Network Exchange) | Many/general |

| Many more... |

Note: An application programming interface (API) is a way for two (or more) computer programs to interact with each other. For example, if your model was deployed as API, you would be able to write a program that could send data to it and then receive predictions back.

Which option you choose will be highly dependent on what you're building/who you're working with.

But with so many options, it can be very intimidating.

So best to start small and keep it simple.

And one of the best ways to do so is by turning your machine learning model into a demo app with Gradio and then deploying it on Hugging Face Spaces.

We'll be doing just that with FoodVision Mini later on.

A handful of places and tools to host and deploy machine learning models. There are plenty I've missed so if you'd like to add more, please leave a discussion on GitHub.

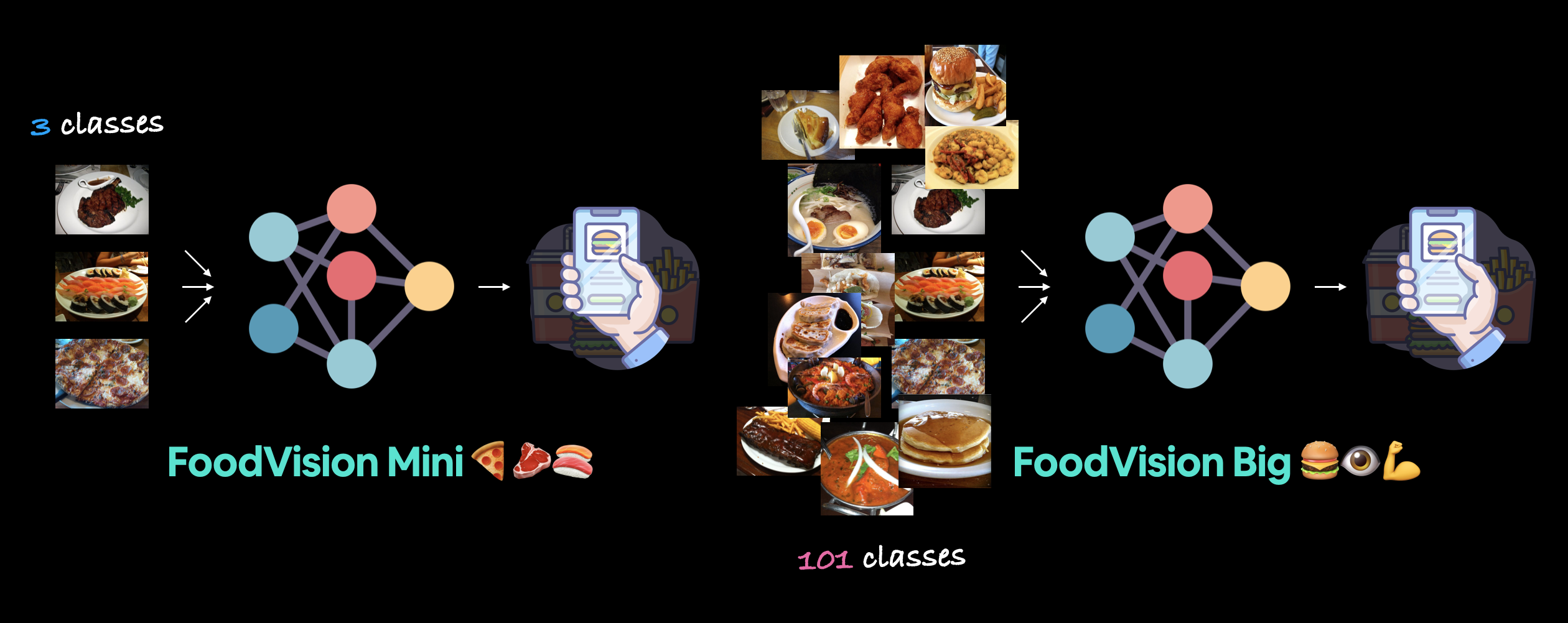

What we're going to cover¶

Enough talking about deploying a machine learning model.

Let's become machine learning engineers and actually deploy one.

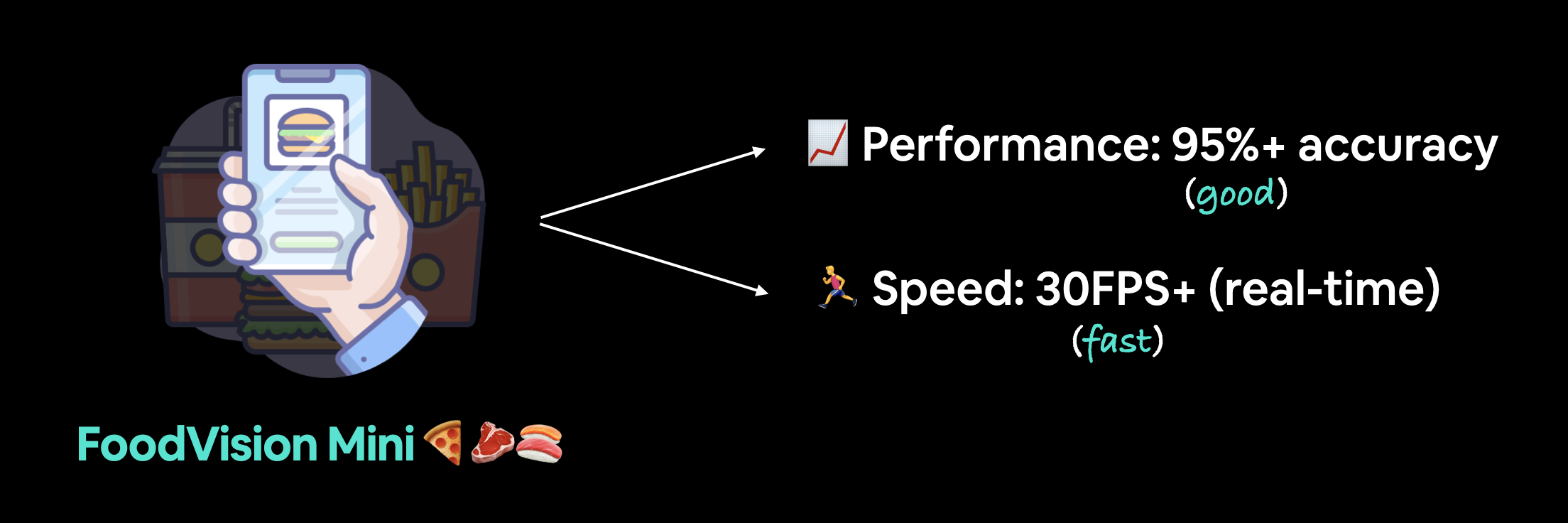

Our goal is to deploy our FoodVision Model via a demo Gradio app with the following metrics:

- Performance: 95%+ accuracy.

- Speed: real-time inference of 30FPS+ (each prediction has a latency of lower than ~0.03s).

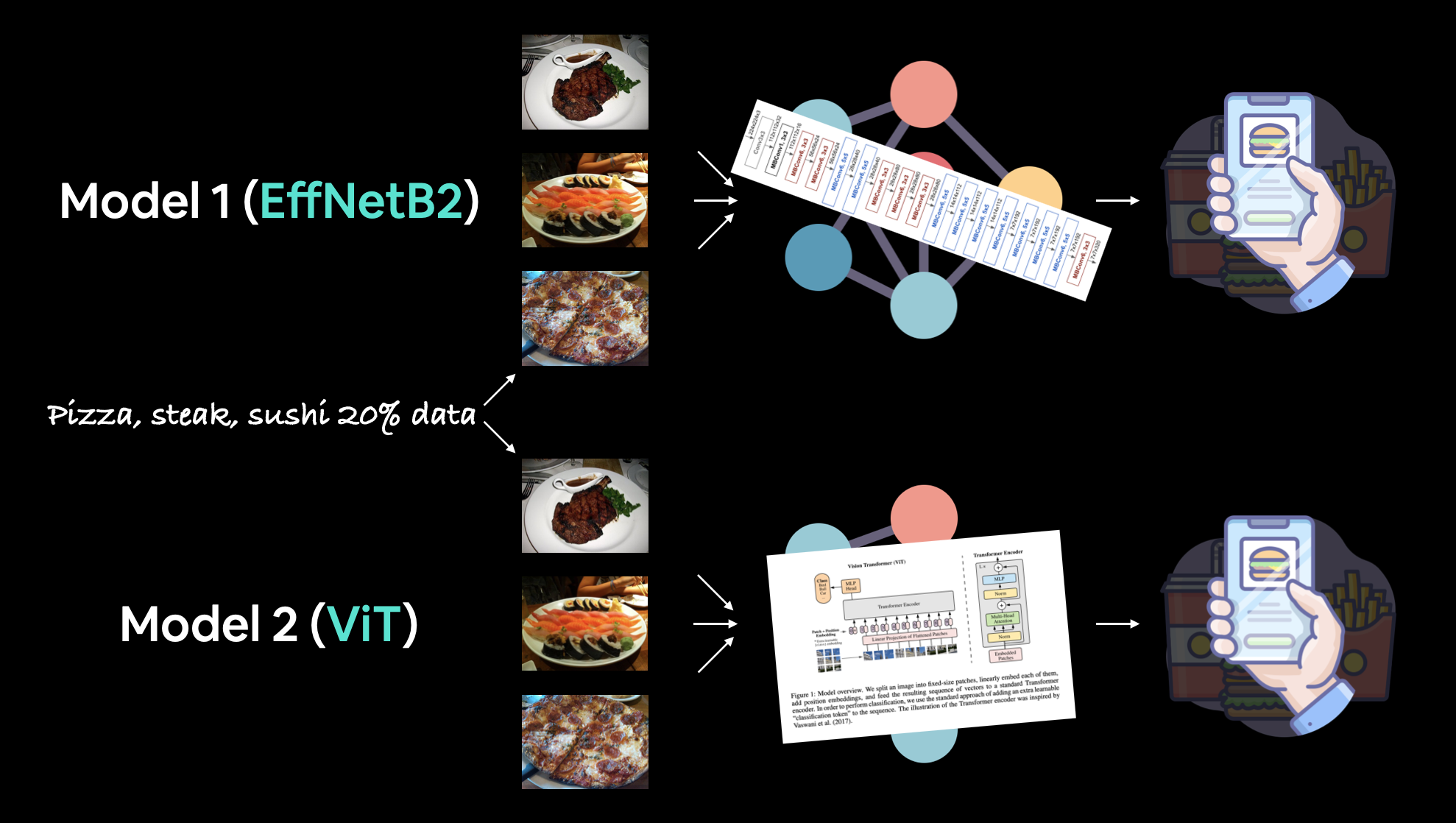

We'll start by running an experiment to compare our best two models so far: EffNetB2 and ViT feature extractors.

Then we'll deploy the one which performs closest to our goal metrics.

Finally, we'll finish with a (BIG) surprise bonus.

| Topic | Contents |

|---|---|

| 0. Getting setup | We've written a fair bit of useful code over the past few sections, let's download it and make sure we can use it again. |

| 1. Get data | Let's download the pizza_steak_sushi_20_percent.zip dataset so we can train our previously best performing models on the same dataset. |

| 2. FoodVision Mini model deployment experiment outline | Even on the third milestone project, we're still going to be running multiple experiments to see which model (EffNetB2 or ViT) achieves closest to our goal metrics. |

| 3. Creating an EffNetB2 feature extractor | An EfficientNetB2 feature extractor performed the best on our pizza, steak, sushi dataset in 07. PyTorch Experiment Tracking, let's recreate it as a candidate for deployment. |

| 4. Creating a ViT feature extractor | A ViT feature extractor has been the best performing model yet on our pizza, steak, sushi dataset in 08. PyTorch Paper Replicating, let's recreate it as a candidate for deployment alongside EffNetB2. |

| 5. Making predictions with our trained models and timing them | We've built two of the best performing models yet, let's make predictions with them and track their results. |

| 6. Comparing model results, prediction times and size | Let's compare our models to see which performs best with our goals. |

| 7. Bringing FoodVision Mini to life by creating a Gradio demo | One of our models performs better than the other (in terms of our goals), so let's turn it into a working app demo! |

| 8. Turning our FoodVision Mini Gradio demo into a deployable app | Our Gradio app demo works locally, let's prepare it for deployment! |

| 9. Deploying our Gradio demo to HuggingFace Spaces | Let's take FoodVision Mini to the web and make it pubically accessible for all! |

| 10. Creating a BIG surprise | We've built FoodVision Mini, time to step things up a notch. |

| 11. Deploying our BIG surprise | Deploying one app was fun, how about we make it two? |

Where can you get help?¶

All of the materials for this course are available on GitHub.

If you run into trouble, you can ask a question on the course GitHub Discussions page.

And of course, there's the PyTorch documentation and PyTorch developer forums, a very helpful place for all things PyTorch.

0. Getting setup¶

As we've done previously, let's make sure we've got all of the modules we'll need for this section.

We'll import the Python scripts (such as data_setup.py and engine.py) we created in 05. PyTorch Going Modular.

To do so, we'll download going_modular directory from the pytorch-deep-learning repository (if we don't already have it).

We'll also get the torchinfo package if it's not available.

torchinfo will help later on to give us a visual representation of our model.

And since later on we'll be using torchvision v0.13 package (available as of July 2022), we'll make sure we've got the latest versions.

Note: If you're using Google Colab, and you don't have a GPU turned on yet, it's now time to turn one on via

Runtime -> Change runtime type -> Hardware accelerator -> GPU.

# For this notebook to run with updated APIs, we need torch 1.12+ and torchvision 0.13+

try:

import torch

import torchvision

assert int(torch.__version__.split(".")[1]) >= 12, "torch version should be 1.12+"

assert int(torchvision.__version__.split(".")[1]) >= 13, "torchvision version should be 0.13+"

print(f"torch version: {torch.__version__}")

print(f"torchvision version: {torchvision.__version__}")

except:

print(f"[INFO] torch/torchvision versions not as required, installing nightly versions.")

!pip3 install -U torch torchvision torchaudio --extra-index-url https://download.pytorch.org/whl/cu113

import torch

import torchvision

print(f"torch version: {torch.__version__}")

print(f"torchvision version: {torchvision.__version__}")

torch version: 1.13.0.dev20220824+cu113 torchvision version: 0.14.0.dev20220824+cu113

Note: If you're using Google Colab and the cell above starts to install various software packages, you may have to restart your runtime after running the above cell. After restarting, you can run the cell again and verify you've got the right versions of

torchandtorchvision.

Now we'll continue with the regular imports, setting up device agnostic code and this time we'll also get the helper_functions.py script from GitHub.

The helper_functions.py script contains several functions we created in previous sections:

set_seeds()to set the random seeds (created in 07. PyTorch Experiment Tracking section 0).download_data()to download a data source given a link (created in 07. PyTorch Experiment Tracking section 1).plot_loss_curves()to inspect our model's training results (created in 04. PyTorch Custom Datasets section 7.8)

Note: It may be a better idea for many of the functions in the

helper_functions.pyscript to be merged intogoing_modular/going_modular/utils.py, perhaps that's an extension you'd like to try.

# Continue with regular imports

import matplotlib.pyplot as plt

import torch

import torchvision

from torch import nn

from torchvision import transforms

# Try to get torchinfo, install it if it doesn't work

try:

from torchinfo import summary

except:

print("[INFO] Couldn't find torchinfo... installing it.")

!pip install -q torchinfo

from torchinfo import summary

# Try to import the going_modular directory, download it from GitHub if it doesn't work

try:

from going_modular.going_modular import data_setup, engine

from helper_functions import download_data, set_seeds, plot_loss_curves

except:

# Get the going_modular scripts

print("[INFO] Couldn't find going_modular or helper_functions scripts... downloading them from GitHub.")

!git clone https://github.com/mrdbourke/pytorch-deep-learning

!mv pytorch-deep-learning/going_modular .

!mv pytorch-deep-learning/helper_functions.py . # get the helper_functions.py script

!rm -rf pytorch-deep-learning

from going_modular.going_modular import data_setup, engine

from helper_functions import download_data, set_seeds, plot_loss_curves

Finally, we'll setup device-agnostic code to make sure our models run on the GPU.

device = "cuda" if torch.cuda.is_available() else "cpu"

device

'cuda'

1. Getting data¶

We left off in 08. PyTorch Paper Replicating comparing our own Vision Transformer (ViT) feature extractor model to the EfficientNetB2 (EffNetB2) feature extractor model we created in 07. PyTorch Experiment Tracking.

And we found that there was a slight difference in the comparison.

The EffNetB2 model was trained on 20% of the pizza, steak and sushi data from Food101 where as the ViT model was trained on 10%.

Since our goal is to deploy the best model for our FoodVision Mini problem, let's start by downloading the 20% pizza, steak and sushi dataset and train an EffNetB2 feature extractor and ViT feature extractor on it and then compare the two models.

This way we'll be comparing apples to apples (one model trained on a dataset to another model trained on the same dataset).

Note: The dataset we're downloading is a sample of the entire Food101 dataset (101 food classes with 1,000 images each). More specifically, 20% refers to 20% of images from the pizza, steak and sushi classes selected at random. You can see how this dataset was created in

extras/04_custom_data_creation.ipynband more details in 04. PyTorch Custom Datasets section 1.

We can download the data using the download_data() function we created in 07. PyTorch Experiment Tracking section 1 from helper_functions.py.

# Download pizza, steak, sushi images from GitHub

data_20_percent_path = download_data(source="https://github.com/mrdbourke/pytorch-deep-learning/raw/main/data/pizza_steak_sushi_20_percent.zip",

destination="pizza_steak_sushi_20_percent")

data_20_percent_path

[INFO] data/pizza_steak_sushi_20_percent directory exists, skipping download.

PosixPath('data/pizza_steak_sushi_20_percent')

Wonderful!

Now we've got a dataset, let's create training and test paths.

# Setup directory paths to train and test images

train_dir = data_20_percent_path / "train"

test_dir = data_20_percent_path / "test"

2. FoodVision Mini model deployment experiment outline¶

The ideal deployed model FoodVision Mini performs well and fast.

We'd like our model to perform as close to real-time as possible.

Real-time in this case being ~30FPS (frames per second) because that's about how fast the human eye can see (there is debate on this but let's just use ~30FPS as our benchmark).

And for classifying three different classes (pizza, steak and sushi), we'd like a model that performs at 95%+ accuracy.

Of course, higher accuracy would be nice but this might sacrifice speed.

So our goals are:

- Performance - A model that performs at 95%+ accuracy.

- Speed - A model that can classify an image at ~30FPS (0.03 seconds inference time per image, also known as latency).

FoodVision Mini deployment goals. We'd like a fast predicting well-performing model (because a slow app is boring).

We'll put an emphasis on speed, meaning, we'd prefer a model performing at 90%+ accuracy at ~30FPS than a model performing 95%+ accuracy at 10FPS.

To try and achieve these results, let's bring in our best performing models from the previous sections:

- EffNetB2 feature extractor (EffNetB2 for short) - originally created in 07. PyTorch Experiment Tracking section 7.5 using

torchvision.models.efficientnet_b2()with adjustedclassifierlayers. - ViT-B/16 feature extractor (ViT for short) - originally created in 08. PyTorch Paper Replicating section 10 using

torchvision.models.vit_b_16()with adjustedheadlayers.- Note ViT-B/16 stands for "Vision Transformer Base, patch size 16".

Note: A "feature extractor model" often starts with a model that has been pretrained on a dataset similar to your own problem. The pretrained model's base layers are often left frozen (the pretrained patterns/weights stay the same) whilst some of the top (or classifier/classification head) layers get customized to your own problem by training on your own data. We covered the concept of a feature extractor model in 06. PyTorch Transfer Learning section 3.4.

3. Creating an EffNetB2 feature extractor¶

We first created an EffNetB2 feature extractor model in 07. PyTorch Experiment Tracking section 7.5.

And by the end of that section we saw it performed very well.

So let's now recreate it here so we can compare its results to a ViT feature extractor trained on the same data.

To do so we can:

- Setup the pretrained weights as

weights=torchvision.models.EfficientNet_B2_Weights.DEFAULT, where "DEFAULT" means "best currently available" (or could useweights="DEFAULT"). - Get the pretrained model image transforms from the weights with the

transforms()method (we need these so we can convert our images into the same format as the pretrained EffNetB2 was trained on). - Create a pretrained model instance by passing the weights to an instance of

torchvision.models.efficientnet_b2. - Freeze the base layers in the model.

- Update the classifier head to suit our own data.

# 1. Setup pretrained EffNetB2 weights

effnetb2_weights = torchvision.models.EfficientNet_B2_Weights.DEFAULT

# 2. Get EffNetB2 transforms

effnetb2_transforms = effnetb2_weights.transforms()

# 3. Setup pretrained model

effnetb2 = torchvision.models.efficientnet_b2(weights=effnetb2_weights) # could also use weights="DEFAULT"

# 4. Freeze the base layers in the model (this will freeze all layers to begin with)

for param in effnetb2.parameters():

param.requires_grad = False

Now to change the classifier head, let's first inspect it using the classifier attribute of our model.

# Check out EffNetB2 classifier head

effnetb2.classifier

Sequential( (0): Dropout(p=0.3, inplace=True) (1): Linear(in_features=1408, out_features=1000, bias=True) )

Excellent! To change the classifier head to suit our own problem, let's replace the out_features variable with the same number of classes we have (in our case, out_features=3, one for pizza, steak, sushi).

Note: This process of changing the output layers/classifier head will be dependent on the problem you're working on. For example, if you wanted a different number of outputs or a different kind of output, you would have to change the output layers accordingly.

# 5. Update the classifier head

effnetb2.classifier = nn.Sequential(

nn.Dropout(p=0.3, inplace=True), # keep dropout layer same

nn.Linear(in_features=1408, # keep in_features same

out_features=3)) # change out_features to suit our number of classes

Beautiful!

3.1 Creating a function to make an EffNetB2 feature extractor¶

Looks like our EffNetB2 feature extractor is ready to go, however, since there's quite a few steps involved here, how about we turn the code above into a function we can re-use later?

We'll call it create_effnetb2_model() and it'll take a customizable number of classes and a random seed parameter for reproducibility.

Ideally, it will return an EffNetB2 feature extractor along with its associated transforms.

def create_effnetb2_model(num_classes:int=3,

seed:int=42):

"""Creates an EfficientNetB2 feature extractor model and transforms.

Args:

num_classes (int, optional): number of classes in the classifier head.

Defaults to 3.

seed (int, optional): random seed value. Defaults to 42.

Returns:

model (torch.nn.Module): EffNetB2 feature extractor model.

transforms (torchvision.transforms): EffNetB2 image transforms.

"""

# 1, 2, 3. Create EffNetB2 pretrained weights, transforms and model

weights = torchvision.models.EfficientNet_B2_Weights.DEFAULT

transforms = weights.transforms()

model = torchvision.models.efficientnet_b2(weights=weights)

# 4. Freeze all layers in base model

for param in model.parameters():

param.requires_grad = False

# 5. Change classifier head with random seed for reproducibility

torch.manual_seed(seed)

model.classifier = nn.Sequential(

nn.Dropout(p=0.3, inplace=True),

nn.Linear(in_features=1408, out_features=num_classes),

)

return model, transforms

Woohoo! That's a nice looking function, let's try it out.

effnetb2, effnetb2_transforms = create_effnetb2_model(num_classes=3,

seed=42)

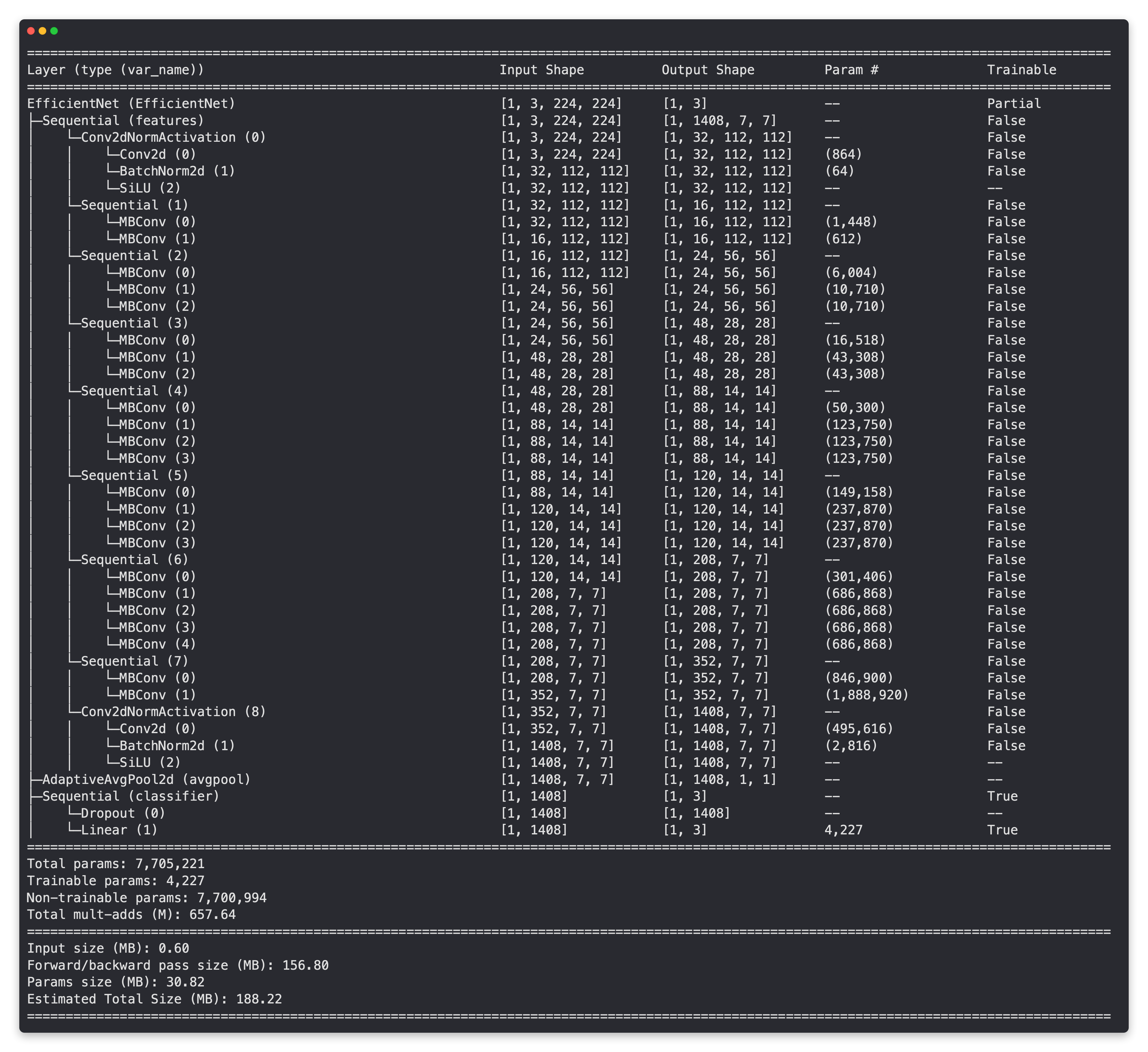

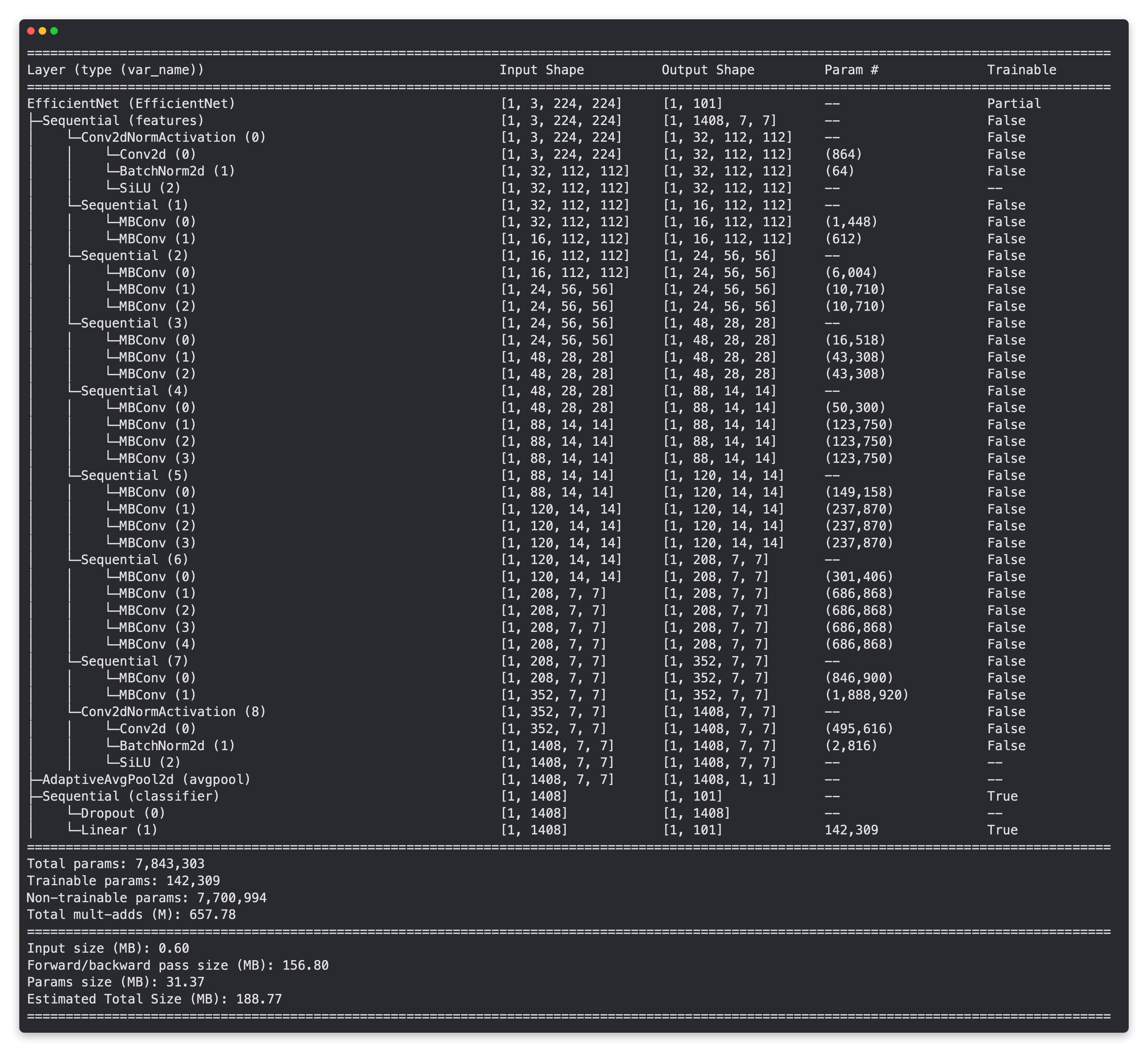

No errors, nice, now to really try it out, let's get a summary with torchinfo.summary().

from torchinfo import summary

# # Print EffNetB2 model summary (uncomment for full output)

# summary(effnetb2,

# input_size=(1, 3, 224, 224),

# col_names=["input_size", "output_size", "num_params", "trainable"],

# col_width=20,

# row_settings=["var_names"])

Base layers frozen, top layers trainable and customized!

3.2 Creating DataLoaders for EffNetB2¶

Our EffNetB2 feature extractor is ready, time to create some DataLoaders.

We can do this by using the data_setup.create_dataloaders() function we created in 05. PyTorch Going Modular section 2.

We'll use a batch_size of 32 and transform our images using the effnetb2_transforms so they're in the same format that our effnetb2 model was trained on.

# Setup DataLoaders

from going_modular.going_modular import data_setup

train_dataloader_effnetb2, test_dataloader_effnetb2, class_names = data_setup.create_dataloaders(train_dir=train_dir,

test_dir=test_dir,

transform=effnetb2_transforms,

batch_size=32)

3.3 Training EffNetB2 feature extractor¶

Model ready, DataLoaders ready, let's train!

Just like in 07. PyTorch Experiment Tracking section 7.6, ten epochs should be enough to get good results.

We can do so by creating an optimizer (we'll use torch.optim.Adam() with a learning rate of 1e-3), a loss function (we'll use torch.nn.CrossEntropyLoss() for multi-class classification) and then passing these as well as our DataLoaders to the engine.train() function we created in 05. PyTorch Going Modular section 4.

from going_modular.going_modular import engine

# Setup optimizer

optimizer = torch.optim.Adam(params=effnetb2.parameters(),

lr=1e-3)

# Setup loss function

loss_fn = torch.nn.CrossEntropyLoss()

# Set seeds for reproducibility and train the model

set_seeds()

effnetb2_results = engine.train(model=effnetb2,

train_dataloader=train_dataloader_effnetb2,

test_dataloader=test_dataloader_effnetb2,

epochs=10,

optimizer=optimizer,

loss_fn=loss_fn,

device=device)

0%| | 0/10 [00:00<?, ?it/s]

Epoch: 1 | train_loss: 0.9856 | train_acc: 0.5604 | test_loss: 0.7408 | test_acc: 0.9347 Epoch: 2 | train_loss: 0.7175 | train_acc: 0.8438 | test_loss: 0.5869 | test_acc: 0.9409 Epoch: 3 | train_loss: 0.5876 | train_acc: 0.8917 | test_loss: 0.4909 | test_acc: 0.9500 Epoch: 4 | train_loss: 0.4474 | train_acc: 0.9062 | test_loss: 0.4355 | test_acc: 0.9409 Epoch: 5 | train_loss: 0.4290 | train_acc: 0.9104 | test_loss: 0.3915 | test_acc: 0.9443 Epoch: 6 | train_loss: 0.4381 | train_acc: 0.8896 | test_loss: 0.3512 | test_acc: 0.9688 Epoch: 7 | train_loss: 0.4245 | train_acc: 0.8771 | test_loss: 0.3268 | test_acc: 0.9563 Epoch: 8 | train_loss: 0.3897 | train_acc: 0.8958 | test_loss: 0.3457 | test_acc: 0.9381 Epoch: 9 | train_loss: 0.3749 | train_acc: 0.8812 | test_loss: 0.3129 | test_acc: 0.9131 Epoch: 10 | train_loss: 0.3757 | train_acc: 0.8604 | test_loss: 0.2813 | test_acc: 0.9688

3.4 Inspecting EffNetB2 loss curves¶

Nice!

As we saw in 07. PyTorch Experiment Tracking, the EffNetB2 feature extractor model works quite well on our data.

Let's turn its results into loss curves to inspect them further.

Note: Loss curves are one of the best ways to visualize how your model's performing. For more on loss curves, check out 04. PyTorch Custom Datasets section 8: What should an ideal loss curve look like?

from helper_functions import plot_loss_curves

plot_loss_curves(effnetb2_results)

Woah!

Those are some nice looking loss curves.

It looks like our model is performing quite well and perhaps would benefit from a little longer training and potentially some data augmentation (to help prevent potential overfitting occurring from longer training).

3.5 Saving EffNetB2 feature extractor¶

Now we've got a well-performing trained model, let's save it to file so we can import and use it later.

To save our model we can use the utils.save_model() function we created in 05. PyTorch Going Modular section 5.

We'll set the target_dir to "models" and the model_name to "09_pretrained_effnetb2_feature_extractor_pizza_steak_sushi_20_percent.pth" (a little comprehensive but at least we know what's going on).

from going_modular.going_modular import utils

# Save the model

utils.save_model(model=effnetb2,

target_dir="models",

model_name="09_pretrained_effnetb2_feature_extractor_pizza_steak_sushi_20_percent.pth")

[INFO] Saving model to: models/09_pretrained_effnetb2_feature_extractor_pizza_steak_sushi_20_percent.pth

3.6 Checking the size of EffNetB2 feature extractor¶

Since one of our criteria for deploying a model to power FoodVision Mini is speed (~30FPS or better), let's check the size of our model.

Why check the size?

Well, while not always the case, the size of a model can influence its inference speed.

As in, if a model has more parameters, it generally performs more operations and each one of these operations requires some computing power.

And because we'd like our model to work on devices with limited computing power (e.g. on a mobile device or in a web browser), generally, the smaller the size the better (as long as it still performs well in terms of accuracy).

To check our model's size in bytes, we can use Python's pathlib.Path.stat("path_to_model").st_size and then we can convert it (roughly) to megabytes by dividing it by (1024*1024).

from pathlib import Path

# Get the model size in bytes then convert to megabytes

pretrained_effnetb2_model_size = Path("models/09_pretrained_effnetb2_feature_extractor_pizza_steak_sushi_20_percent.pth").stat().st_size // (1024*1024) # division converts bytes to megabytes (roughly)

print(f"Pretrained EffNetB2 feature extractor model size: {pretrained_effnetb2_model_size} MB")

Pretrained EffNetB2 feature extractor model size: 29 MB

3.7 Collecting EffNetB2 feature extractor stats¶

We've got a few statistics about our EffNetB2 feature extractor model such as test loss, test accuracy and model size, how about we collect them all in a dictionary so we can compare them to the upcoming ViT feature extractor.

And we'll calculate an extra one for fun, total number of parameters.

We can do so by counting the number of elements (or patterns/weights) in effnetb2.parameters(). We'll access the number of elements in each parameter using the torch.numel() (short for "number of elements") method.

# Count number of parameters in EffNetB2

effnetb2_total_params = sum(torch.numel(param) for param in effnetb2.parameters())

effnetb2_total_params

7705221

Excellent!

Now let's put everything in a dictionary so we can make comparisons later on.

# Create a dictionary with EffNetB2 statistics

effnetb2_stats = {"test_loss": effnetb2_results["test_loss"][-1],

"test_acc": effnetb2_results["test_acc"][-1],

"number_of_parameters": effnetb2_total_params,

"model_size (MB)": pretrained_effnetb2_model_size}

effnetb2_stats

{'test_loss': 0.28128674924373626,

'test_acc': 0.96875,

'number_of_parameters': 7705221,

'model_size (MB)': 29}

Epic!

Looks like our EffNetB2 model is performing at over 95% accuracy!

Criteria number 1: perform at 95%+ accuracy, tick!

4. Creating a ViT feature extractor¶

Time to continue with our FoodVision Mini modelling experiments.

This time we're going to create a ViT feature extractor.

And we'll do it in much the same way as the EffNetB2 feature extractor except this time with torchvision.models.vit_b_16() instead of torchvision.models.efficientnet_b2().

We'll start by creating a function called create_vit_model() which will be very similar to create_effnetb2_model() except of course returning a ViT feature extractor model and transforms rather than EffNetB2.

Another slight difference is that torchvision.models.vit_b_16()'s output layer is called heads rather than classifier.

# Check out ViT heads layer

vit = torchvision.models.vit_b_16()

vit.heads

Sequential( (head): Linear(in_features=768, out_features=1000, bias=True) )

Knowing this, we've got all the pieces of the puzzle we need.

def create_vit_model(num_classes:int=3,

seed:int=42):

"""Creates a ViT-B/16 feature extractor model and transforms.

Args:

num_classes (int, optional): number of target classes. Defaults to 3.

seed (int, optional): random seed value for output layer. Defaults to 42.

Returns:

model (torch.nn.Module): ViT-B/16 feature extractor model.

transforms (torchvision.transforms): ViT-B/16 image transforms.

"""

# Create ViT_B_16 pretrained weights, transforms and model

weights = torchvision.models.ViT_B_16_Weights.DEFAULT

transforms = weights.transforms()

model = torchvision.models.vit_b_16(weights=weights)

# Freeze all layers in model

for param in model.parameters():

param.requires_grad = False

# Change classifier head to suit our needs (this will be trainable)

torch.manual_seed(seed)

model.heads = nn.Sequential(nn.Linear(in_features=768, # keep this the same as original model

out_features=num_classes)) # update to reflect target number of classes

return model, transforms

ViT feature extraction model creation function ready!

Let's test it out.

# Create ViT model and transforms

vit, vit_transforms = create_vit_model(num_classes=3,

seed=42)

No errors, lovely to see!

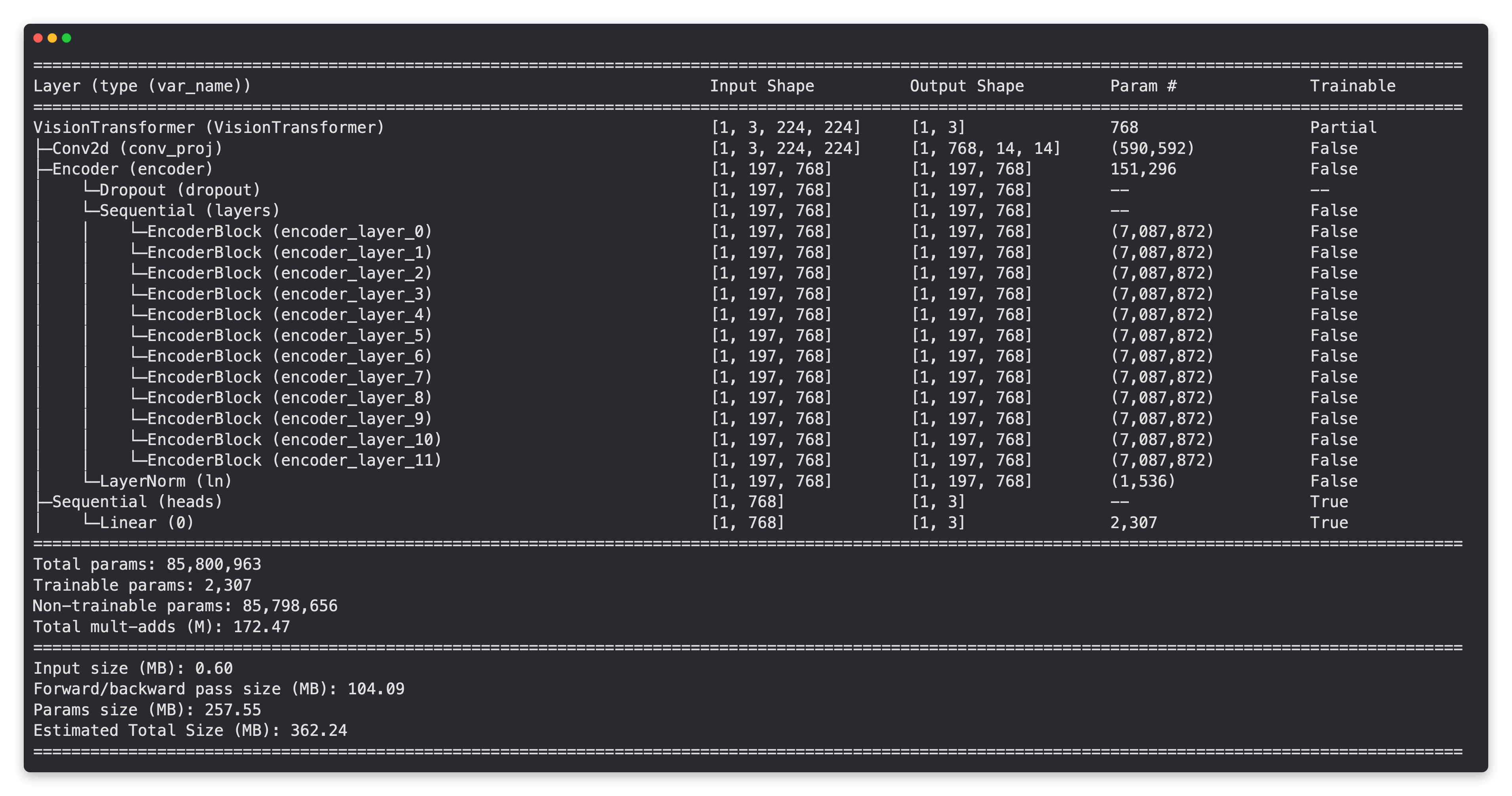

Now let's get a nice-looking summary of our ViT model using torchinfo.summary().

from torchinfo import summary

# # Print ViT feature extractor model summary (uncomment for full output)

# summary(vit,

# input_size=(1, 3, 224, 224),

# col_names=["input_size", "output_size", "num_params", "trainable"],

# col_width=20,

# row_settings=["var_names"])

Just like our EffNetB2 feature extractor model, our ViT model's base layers are frozen and the output layer is customized to our needs!

Do you notice the big difference though?

Our ViT model has far more parameters than our EffNetB2 model. Perhaps this will come into play when we compare our models across speed and performance later on.

4.1 Create DataLoaders for ViT¶

We've got our ViT model ready, now let's create some DataLoaders for it.

We'll do this in the same way we did for EffNetB2 except we'll use vit_transforms to transform our images into the same format the ViT model was trained on.

# Setup ViT DataLoaders

from going_modular.going_modular import data_setup

train_dataloader_vit, test_dataloader_vit, class_names = data_setup.create_dataloaders(train_dir=train_dir,

test_dir=test_dir,

transform=vit_transforms,

batch_size=32)

4.2 Training ViT feature extractor¶

You know what time it is...

...it's traininggggggg time (sung in the same tune as the song Closing Time).

Let's train our ViT feature extractor model for 10 epochs using our engine.train() function with torch.optim.Adam() and a learning rate of 1e-3 as our optimizer and torch.nn.CrossEntropyLoss() as our loss function.

We'll use our set_seeds() function before training to try and make our results as reproducible as possible.

from going_modular.going_modular import engine

# Setup optimizer

optimizer = torch.optim.Adam(params=vit.parameters(),

lr=1e-3)

# Setup loss function

loss_fn = torch.nn.CrossEntropyLoss()

# Train ViT model with seeds set for reproducibility

set_seeds()

vit_results = engine.train(model=vit,

train_dataloader=train_dataloader_vit,

test_dataloader=test_dataloader_vit,

epochs=10,

optimizer=optimizer,

loss_fn=loss_fn,

device=device)

0%| | 0/10 [00:00<?, ?it/s]

Epoch: 1 | train_loss: 0.7023 | train_acc: 0.7500 | test_loss: 0.2714 | test_acc: 0.9290 Epoch: 2 | train_loss: 0.2531 | train_acc: 0.9104 | test_loss: 0.1669 | test_acc: 0.9602 Epoch: 3 | train_loss: 0.1766 | train_acc: 0.9542 | test_loss: 0.1270 | test_acc: 0.9693 Epoch: 4 | train_loss: 0.1277 | train_acc: 0.9625 | test_loss: 0.1072 | test_acc: 0.9722 Epoch: 5 | train_loss: 0.1163 | train_acc: 0.9646 | test_loss: 0.0950 | test_acc: 0.9784 Epoch: 6 | train_loss: 0.1270 | train_acc: 0.9375 | test_loss: 0.0830 | test_acc: 0.9722 Epoch: 7 | train_loss: 0.0899 | train_acc: 0.9771 | test_loss: 0.0844 | test_acc: 0.9784 Epoch: 8 | train_loss: 0.0928 | train_acc: 0.9812 | test_loss: 0.0759 | test_acc: 0.9722 Epoch: 9 | train_loss: 0.0933 | train_acc: 0.9792 | test_loss: 0.0729 | test_acc: 0.9784 Epoch: 10 | train_loss: 0.0662 | train_acc: 0.9833 | test_loss: 0.0642 | test_acc: 0.9847

4.3 Inspecting ViT loss curves¶

Alright, alright, alright, ViT model trained, let's get visual and see some loss curves.

Note: Don't forget you can see what an ideal set of loss curves should look like in 04. PyTorch Custom Datasets section 8.

from helper_functions import plot_loss_curves

plot_loss_curves(vit_results)

Ohh yeah!

Those are some nice looking loss curves. Just like our EffNetB2 feature extractor model, it looks our ViT model might benefit from a little longer training time and perhaps some data augmentation (to help prevent overfitting).

4.4 Saving ViT feature extractor¶

Our ViT model is performing outstanding!

So let's save it to file so we can import it and use it later if we wish.

We can do so using the utils.save_model() function we created in 05. PyTorch Going Modular section 5.

# Save the model

from going_modular.going_modular import utils

utils.save_model(model=vit,

target_dir="models",

model_name="09_pretrained_vit_feature_extractor_pizza_steak_sushi_20_percent.pth")

[INFO] Saving model to: models/09_pretrained_vit_feature_extractor_pizza_steak_sushi_20_percent.pth

4.5 Checking the size of ViT feature extractor¶

And since we want to compare our EffNetB2 model to our ViT model across a number of characteristics, let's find out its size.

To check our model's size in bytes, we can use Python's pathlib.Path.stat("path_to_model").st_size and then we can convert it (roughly) to megabytes by dividing it by (1024*1024).

from pathlib import Path

# Get the model size in bytes then convert to megabytes

pretrained_vit_model_size = Path("models/09_pretrained_vit_feature_extractor_pizza_steak_sushi_20_percent.pth").stat().st_size // (1024*1024) # division converts bytes to megabytes (roughly)

print(f"Pretrained ViT feature extractor model size: {pretrained_vit_model_size} MB")

Pretrained ViT feature extractor model size: 327 MB

Hmm, how does the ViT feature extractor model size compare to our EffNetB2 model size?

We'll find this out shortly when we compare all of our model's characteristics.

4.6 Collecting ViT feature extractor stats¶

Let's put together all of our ViT feature extractor model statistics.

We saw it in the summary output above but we'll calculate its total number of parameters.

# Count number of parameters in ViT

vit_total_params = sum(torch.numel(param) for param in vit.parameters())

vit_total_params

85800963

Woah, that looks like a fair bit more than our EffNetB2!

Note: A larger number of parameters (or weights/patterns) generally means a model has a higher capacity to learn, whether it actually uses this extra capacity is another story. In light of this, our EffNetB2 model has 7,705,221 parameters where as our ViT model has 85,800,963 (11.1x more) so we could assume that our ViT model has more of a capacity to learn, if given more data (more opportunities to learn). However, this larger capacity to learn ofen comes with an increased model filesize and a longer time to perform inference.

Now let's create a dictionary with some important characteristics of our ViT model.

# Create ViT statistics dictionary

vit_stats = {"test_loss": vit_results["test_loss"][-1],

"test_acc": vit_results["test_acc"][-1],

"number_of_parameters": vit_total_params,

"model_size (MB)": pretrained_vit_model_size}

vit_stats

{'test_loss': 0.06418210905976593,

'test_acc': 0.984659090909091,

'number_of_parameters': 85800963,

'model_size (MB)': 327}

Nice! Looks like our ViT model achieves over 95% accuracy too.

5. Making predictions with our trained models and timing them¶

We've got a couple of trained models, both performing pretty well.

Now how about we test them out doing what we'd like them to do?

As in, let's see how they go making predictions (performing inference).

We know both of our models are performing at over 95% accuracy on the test dataset, but how fast are they?

Ideally, if we're deploying our FoodVision Mini model to a mobile device so people can take photos of their food and identify it, we'd like the predictions to happen at real-time (~30 frames per second).

That's why our second criteria is: a fast model.

To find out how long each of our models take to performance inference, let's create a function called pred_and_store() to iterate over each of the test dataset images one by one and perform a prediction.

We'll time each of the predictions as well as store the results in a common prediction format: a list of dictionaries (where each element in the list is a single prediction and each sinlge prediction is a dictionary).

Note: We time the predictions one by one rather than by batch because when our model is deployed, it will likely only be making a prediction on one image at a time. As in, someone takes a photo and our model predicts on that single image.

Since we'd like to make predictions across all the images in the test set, let's first get a list of all of the test image paths so we can iterate over them.

To do so, we'll use Python's pathlib.Path("target_dir").glob("*/*.jpg")) to find all of the filepaths in a target directory with the extension .jpg (all of our test images).

from pathlib import Path

# Get all test data paths

print(f"[INFO] Finding all filepaths ending with '.jpg' in directory: {test_dir}")

test_data_paths = list(Path(test_dir).glob("*/*.jpg"))

test_data_paths[:5]

[INFO] Finding all filepaths ending with '.jpg' in directory: data/pizza_steak_sushi_20_percent/test

[PosixPath('data/pizza_steak_sushi_20_percent/test/steak/831681.jpg'),

PosixPath('data/pizza_steak_sushi_20_percent/test/steak/3100563.jpg'),

PosixPath('data/pizza_steak_sushi_20_percent/test/steak/2752603.jpg'),

PosixPath('data/pizza_steak_sushi_20_percent/test/steak/39461.jpg'),

PosixPath('data/pizza_steak_sushi_20_percent/test/steak/730464.jpg')]

5.1 Creating a function to make predictions across the test dataset¶

Now we've got a list of our test image paths, let's get to work on our pred_and_store() function:

- Create a function that takes a list of paths, a trained PyTorch model, a series of transforms (to prepare images), a list of target class names and a target device.

- Create an empty list to store prediction dictionaries (we want the function to return a list of dictionaries, one for each prediction).

- Loop through the target input paths (steps 4-14 will happen inside the loop).

- Create an empty dictionary for each iteration in the loop to store prediction values per sample.

- Get the sample path and ground truth class name (we can do this by inferring the class from the path).

- Start the prediction timer using Python's

timeit.default_timer(). - Open the image using

PIL.Image.open(path). - Transform the image so it's capable of being used with the target model as well as add a batch dimension and send the image to the target device.

- Prepare the model for inference by sending it to the target device and turning on

eval()mode. - Turn on

torch.inference_mode()and pass the target transformed image to the model and calculate the prediction probability usingtorch.softmax()and the target label usingtorch.argmax(). - Add the prediction probability and prediction class to the prediction dictionary created in step 4. Also make sure the prediction probability is on the CPU so it can be used with non-GPU libraries such as NumPy and pandas for later inspection.

- End the prediction timer started in step 6 and add the time to the prediction dictionary created in step 4.

- See if the predicted class matches the ground truth class from step 5 and add the result to the prediction dictionary created in step 4.

- Append the updated prediction dictionary to the empty list of predictions created in step 2.

- Return the list of prediction dictionaries.

A bunch of steps, but nothing we can't handle!

Let's do it.

import pathlib

import torch

from PIL import Image

from timeit import default_timer as timer

from tqdm.auto import tqdm

from typing import List, Dict

# 1. Create a function to return a list of dictionaries with sample, truth label, prediction, prediction probability and prediction time

def pred_and_store(paths: List[pathlib.Path],

model: torch.nn.Module,

transform: torchvision.transforms,

class_names: List[str],

device: str = "cuda" if torch.cuda.is_available() else "cpu") -> List[Dict]:

# 2. Create an empty list to store prediction dictionaries

pred_list = []

# 3. Loop through target paths

for path in tqdm(paths):

# 4. Create empty dictionary to store prediction information for each sample

pred_dict = {}

# 5. Get the sample path and ground truth class name

pred_dict["image_path"] = path

class_name = path.parent.stem

pred_dict["class_name"] = class_name

# 6. Start the prediction timer

start_time = timer()

# 7. Open image path

img = Image.open(path)

# 8. Transform the image, add batch dimension and put image on target device

transformed_image = transform(img).unsqueeze(0).to(device)

# 9. Prepare model for inference by sending it to target device and turning on eval() mode

model.to(device)

model.eval()

# 10. Get prediction probability, predicition label and prediction class

with torch.inference_mode():

pred_logit = model(transformed_image) # perform inference on target sample

pred_prob = torch.softmax(pred_logit, dim=1) # turn logits into prediction probabilities

pred_label = torch.argmax(pred_prob, dim=1) # turn prediction probabilities into prediction label

pred_class = class_names[pred_label.cpu()] # hardcode prediction class to be on CPU

# 11. Make sure things in the dictionary are on CPU (required for inspecting predictions later on)

pred_dict["pred_prob"] = round(pred_prob.unsqueeze(0).max().cpu().item(), 4)

pred_dict["pred_class"] = pred_class

# 12. End the timer and calculate time per pred

end_time = timer()

pred_dict["time_for_pred"] = round(end_time-start_time, 4)

# 13. Does the pred match the true label?

pred_dict["correct"] = class_name == pred_class

# 14. Add the dictionary to the list of preds

pred_list.append(pred_dict)

# 15. Return list of prediction dictionaries

return pred_list

Ho, ho!

What a good looking function!

And you know what, since our pred_and_store() is a pretty good utility function for making and storing predictions, it could be stored to going_modular.going_modular.predictions.py for later use. That might be an extension you'd like to try, check out 05. PyTorch Going Modular for ideas.

5.2 Making and timing predictions with EffNetB2¶

Time to test out our pred_and_store() function!

Let's start by using it to make predictions across the test dataset with our EffNetB2 model, paying attention to two details:

- Device - We'll hard code the

deviceparameter to use"cpu"because when we deploy our model, we won't always have access to a"cuda"(GPU) device.- Making the predictions on CPU will be a good indicator of speed of inference too because generally predictions on CPU devices are slower than GPU devices.

- Transforms - We'll also be sure to set the

transformparameter toeffnetb2_transformsto make sure the images are opened and transformed in the same way oureffnetb2model has been trained on.

# Make predictions across test dataset with EffNetB2

effnetb2_test_pred_dicts = pred_and_store(paths=test_data_paths,

model=effnetb2,

transform=effnetb2_transforms,

class_names=class_names,

device="cpu") # make predictions on CPU

0%| | 0/150 [00:00<?, ?it/s]

Nice! Look at those predictions fly!

Let's inspect the first couple and see what they look like.

# Inspect the first 2 prediction dictionaries

effnetb2_test_pred_dicts[:2]

[{'image_path': PosixPath('data/pizza_steak_sushi_20_percent/test/steak/831681.jpg'),

'class_name': 'steak',

'pred_prob': 0.9293,

'pred_class': 'steak',

'time_for_pred': 0.0494,

'correct': True},

{'image_path': PosixPath('data/pizza_steak_sushi_20_percent/test/steak/3100563.jpg'),

'class_name': 'steak',

'pred_prob': 0.9534,

'pred_class': 'steak',

'time_for_pred': 0.0264,

'correct': True}]

Woohoo!

It looks like our pred_and_store() function worked nicely.

Thanks to our list of dictionaries data structure, we've got plenty of useful information we can further inspect.

To do so, let's turn our list of dictionaries into a pandas DataFrame.

# Turn the test_pred_dicts into a DataFrame

import pandas as pd

effnetb2_test_pred_df = pd.DataFrame(effnetb2_test_pred_dicts)

effnetb2_test_pred_df.head()

| image_path | class_name | pred_prob | pred_class | time_for_pred | correct | |

|---|---|---|---|---|---|---|

| 0 | data/pizza_steak_sushi_20_percent/test/steak/8... | steak | 0.9293 | steak | 0.0494 | True |

| 1 | data/pizza_steak_sushi_20_percent/test/steak/3... | steak | 0.9534 | steak | 0.0264 | True |

| 2 | data/pizza_steak_sushi_20_percent/test/steak/2... | steak | 0.7532 | steak | 0.0256 | True |

| 3 | data/pizza_steak_sushi_20_percent/test/steak/3... | steak | 0.5935 | steak | 0.0263 | True |

| 4 | data/pizza_steak_sushi_20_percent/test/steak/7... | steak | 0.8959 | steak | 0.0269 | True |

Beautiful!

Look how easily those prediction dictionaries turn into a structured format we can perform analysis on.

Such as finding how many predictions our EffNetB2 model got wrong...

# Check number of correct predictions

effnetb2_test_pred_df.correct.value_counts()

True 145 False 5 Name: correct, dtype: int64

Five wrong predictions out of 150 total, not bad!

And how about the average prediction time?

# Find the average time per prediction

effnetb2_average_time_per_pred = round(effnetb2_test_pred_df.time_for_pred.mean(), 4)

print(f"EffNetB2 average time per prediction: {effnetb2_average_time_per_pred} seconds")

EffNetB2 average time per prediction: 0.0269 seconds

Hmm, how does that average prediction time live up to our criteria of our model performing at real-time (~30FPS or 0.03 seconds per prediction)?

Note: Prediction times will be different across different hardware types (e.g. a local Intel i9 vs Google Colab CPU). The better and faster the hardware, generally, the faster the prediction. For example, on my local deep learning PC with an Intel i9 chip, my average prediction time with EffNetB2 is around 0.031 seconds (just under real-time). However, on Google Colab (I'm not sure what CPU hardware Colab uses but it looks like it might be an Intel(R) Xeon(R)), my average prediction time with EffNetB2 is about 0.1396 seconds (3-4x slower).

Let's add our EffNetB2 average time per prediction to our effnetb2_stats dictionary.

# Add EffNetB2 average prediction time to stats dictionary

effnetb2_stats["time_per_pred_cpu"] = effnetb2_average_time_per_pred

effnetb2_stats

{'test_loss': 0.28128674924373626,

'test_acc': 0.96875,

'number_of_parameters': 7705221,

'model_size (MB)': 29,

'time_per_pred_cpu': 0.0269}

5.3 Making and timing predictions with ViT¶

We've made predictions with our EffNetB2 model, now let's do the same for our ViT model.

To do so, we can use the pred_and_store() function we created above except this time we'll pass in our vit model as well as the vit_transforms.

And we'll keep the predictions on the CPU via device="cpu" (a natural extension here would be to test the prediction times on CPU and on GPU).

# Make list of prediction dictionaries with ViT feature extractor model on test images

vit_test_pred_dicts = pred_and_store(paths=test_data_paths,

model=vit,

transform=vit_transforms,

class_names=class_names,

device="cpu")

0%| | 0/150 [00:00<?, ?it/s]

Predictions made!

Now let's check out the first couple.

# Check the first couple of ViT predictions on the test dataset

vit_test_pred_dicts[:2]

[{'image_path': PosixPath('data/pizza_steak_sushi_20_percent/test/steak/831681.jpg'),

'class_name': 'steak',

'pred_prob': 0.9933,

'pred_class': 'steak',

'time_for_pred': 0.1313,

'correct': True},

{'image_path': PosixPath('data/pizza_steak_sushi_20_percent/test/steak/3100563.jpg'),

'class_name': 'steak',

'pred_prob': 0.9893,

'pred_class': 'steak',

'time_for_pred': 0.0638,

'correct': True}]

Wonderful!

And just like before, since our ViT model's predictions are in the form of a list of dictionaries, we can easily turn them into a pandas DataFrame for further inspection.

# Turn vit_test_pred_dicts into a DataFrame

import pandas as pd

vit_test_pred_df = pd.DataFrame(vit_test_pred_dicts)

vit_test_pred_df.head()

| image_path | class_name | pred_prob | pred_class | time_for_pred | correct | |

|---|---|---|---|---|---|---|

| 0 | data/pizza_steak_sushi_20_percent/test/steak/8... | steak | 0.9933 | steak | 0.1313 | True |

| 1 | data/pizza_steak_sushi_20_percent/test/steak/3... | steak | 0.9893 | steak | 0.0638 | True |

| 2 | data/pizza_steak_sushi_20_percent/test/steak/2... | steak | 0.9971 | steak | 0.0627 | True |

| 3 | data/pizza_steak_sushi_20_percent/test/steak/3... | steak | 0.7685 | steak | 0.0632 | True |

| 4 | data/pizza_steak_sushi_20_percent/test/steak/7... | steak | 0.9499 | steak | 0.0641 | True |

How many predictions did our ViT model get correct?

# Count the number of correct predictions

vit_test_pred_df.correct.value_counts()

True 148 False 2 Name: correct, dtype: int64

Woah!

Our ViT model did a little better than our EffNetB2 model in terms of correct predictions, only two samples wrong across the whole test dataset.

As an extension you might want to visualize the ViT model's wrong predictions and see if there's any reason why it might've got them wrong.

How about we calculate how long the ViT model took per prediction?

# Calculate average time per prediction for ViT model

vit_average_time_per_pred = round(vit_test_pred_df.time_for_pred.mean(), 4)

print(f"ViT average time per prediction: {vit_average_time_per_pred} seconds")

ViT average time per prediction: 0.0641 seconds

Well, that looks a little slower than our EffNetB2 model's average time per prediction but how does it look in terms of our second criteria: speed?

For now, let's add the value to our vit_stats dictionary so we can compare it to our EffNetB2 model's stats.

Note: The average time per prediction values will be highly dependent on the hardware you make them on. For example, for the ViT model, my average time per prediction (on the CPU) was 0.0693-0.0777 seconds on my local deep learning PC with an Intel i9 CPU. Where as on Google Colab, my average time per prediction with the ViT model was 0.6766-0.7113 seconds.

# Add average prediction time for ViT model on CPU

vit_stats["time_per_pred_cpu"] = vit_average_time_per_pred

vit_stats

{'test_loss': 0.06418210905976593,

'test_acc': 0.984659090909091,

'number_of_parameters': 85800963,

'model_size (MB)': 327,

'time_per_pred_cpu': 0.0641}

6. Comparing model results, prediction times and size¶

Our two best model contenders have been trained and evaluated.

Now let's put them head to head and compare across their different statistics.

To do so, let's turn our effnetb2_stats and vit_stats dictionaries into a pandas DataFrame.

We'll add a column to view the model names as well as convert the test accuracy to a whole percentage rather than decimal.

# Turn stat dictionaries into DataFrame

df = pd.DataFrame([effnetb2_stats, vit_stats])

# Add column for model names

df["model"] = ["EffNetB2", "ViT"]

# Convert accuracy to percentages

df["test_acc"] = round(df["test_acc"] * 100, 2)

df

| test_loss | test_acc | number_of_parameters | model_size (MB) | time_per_pred_cpu | model | |

|---|---|---|---|---|---|---|

| 0 | 0.281287 | 96.88 | 7705221 | 29 | 0.0269 | EffNetB2 |

| 1 | 0.064182 | 98.47 | 85800963 | 327 | 0.0641 | ViT |

Wonderful!

It seems our models are quite close in terms of overall test accuracy but how do they look across the other fields?

One way to find out would be to divide the ViT model statistics by the EffNetB2 model statistics to find out the different ratios between the models.

Let's create another DataFrame to do so.

# Compare ViT to EffNetB2 across different characteristics

pd.DataFrame(data=(df.set_index("model").loc["ViT"] / df.set_index("model").loc["EffNetB2"]), # divide ViT statistics by EffNetB2 statistics

columns=["ViT to EffNetB2 ratios"]).T

| test_loss | test_acc | number_of_parameters | model_size (MB) | time_per_pred_cpu | |

|---|---|---|---|---|---|

| ViT to EffNetB2 ratios | 0.228173 | 1.016412 | 11.135432 | 11.275862 | 2.3829 |

It seems our ViT model outperforms the EffNetB2 model across the performance metrics (test loss, where lower is better and test accuracy, where higher is better) but at the expense of having:

- 11x+ the number of parameters.

- 11x+ the model size.

- 2.5x+ the prediction time per image.

Are these tradeoffs worth it?

Perhaps if we had unlimited compute power but for our use case of deploying the FoodVision Mini model to a smaller device (e.g. a mobile phone), we'd likely start out with the EffNetB2 model for faster predictions at a slightly reduced performance but dramatically smaller size.

6.1 Visualizing the speed vs. performance tradeoff¶

We've seen that our ViT model outperforms our EffNetB2 model in terms of performance metrics such as test loss and test accuracy.

However, our EffNetB2 model performs predictions faster and has a much smaller model size.

Note: Performance or inference time is also often referred to as "latency".

How about we make this fact visual?

We can do so by creating a plot with matplotlib:

- Create a scatter plot from the comparison DataFrame to compare EffNetB2 and ViT

time_per_pred_cpuandtest_accvalues. - Add titles and labels respective of the data and customize the fontsize for aesthetics.

- Annotate the samples on the scatter plot from step 1 with their appropriate labels (the model names).

- Create a legend based on the model sizes (

model_size (MB)).

# 1. Create a plot from model comparison DataFrame

fig, ax = plt.subplots(figsize=(12, 8))

scatter = ax.scatter(data=df,

x="time_per_pred_cpu",

y="test_acc",

c=["blue", "orange"], # what colours to use?

s="model_size (MB)") # size the dots by the model sizes

# 2. Add titles, labels and customize fontsize for aesthetics

ax.set_title("FoodVision Mini Inference Speed vs Performance", fontsize=18)

ax.set_xlabel("Prediction time per image (seconds)", fontsize=14)

ax.set_ylabel("Test accuracy (%)", fontsize=14)

ax.tick_params(axis='both', labelsize=12)

ax.grid(True)

# 3. Annotate with model names

for index, row in df.iterrows():

ax.annotate(text=row["model"], # note: depending on your version of Matplotlib, you may need to use "s=..." or "text=...", see: https://github.com/faustomorales/keras-ocr/issues/183#issuecomment-977733270

xy=(row["time_per_pred_cpu"]+0.0006, row["test_acc"]+0.03),

size=12)

# 4. Create a legend based on model sizes

handles, labels = scatter.legend_elements(prop="sizes", alpha=0.5)

model_size_legend = ax.legend(handles,

labels,

loc="lower right",

title="Model size (MB)",

fontsize=12)

# Save the figure

!mdkir images/

plt.savefig("images/09-foodvision-mini-inference-speed-vs-performance.jpg")

# Show the figure

plt.show()

Woah!

The plot really visualizes the speed vs. performance tradeoff, in other words, when you have a larger, better performing deep model (like our ViT model), it generally takes longer to perform inference (higher latency).

There are exceptions to the rule and new research is being published all the time to help make larger models perform faster.

And it can be tempting to just deploy the best performing model but it's also good to take into consideration where the model is going to be performing.

In our case, the differences between our model's performance levels (on the test loss and test accuracy) aren't too extreme.

But since we'd like to put an emphasis on speed to begin with, we're going to stick with deploying EffNetB2 since it's faster and has a much smaller footprint.

Note: Prediction times will be different across different hardware types (e.g. Intel i9 vs Google Colab CPU vs GPU) so it's important to think about and test where your model is going to end up. Asking questions like "where is the model going to be run?" or "what is the ideal scenario for running the model?" and then running experiments to try and provide answers on your way to deployment is very helpful.

7. Bringing FoodVision Mini to life by creating a Gradio demo¶

We've decided we'd like to deploy the EffNetB2 model (to begin with, this could always be changed later).

So how can we do that?

There are several ways to deploy a machine learning model each with specific use cases (as discussed above).

We're going to be focused on perhaps the quickest and certainly one of the most fun ways to get a model deployed to the internet.

And that's by using Gradio.

What's Gradio?

The homepage describes it beautifully:

Gradio is the fastest way to demo your machine learning model with a friendly web interface so that anyone can use it, anywhere!

Why create a demo of your models?

Because metrics on the test set look nice but you never really know how your model performs until you use it in the wild.

So let's get deploying!

We'll start by importing Gradio with the common alias gr and if it's not present, we'll install it.

# Import/install Gradio

try:

import gradio as gr

except:

!pip -q install gradio

import gradio as gr

print(f"Gradio version: {gr.__version__}")

Gradio version: 3.1.4

Gradio ready!

Let's turn FoodVision Mini into a demo application.

7.1 Gradio overview¶

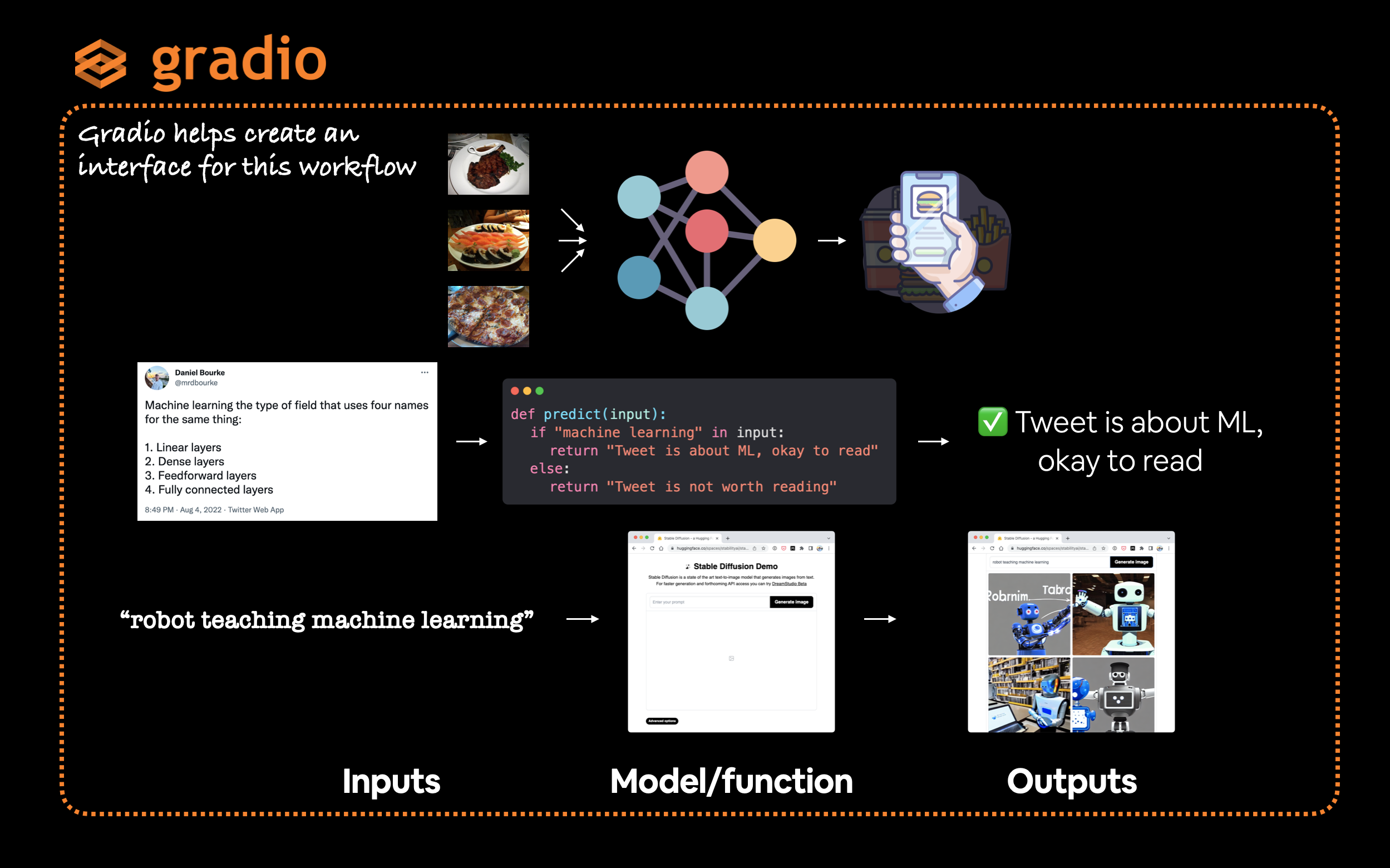

The overall premise of Gradio is very similar to what we've been repeating throughout the course.

What are our inputs and outputs?

And how should we get there?

Well that's what our machine learning model does.

inputs -> ML model -> outputs

In our case, for FoodVision Mini, our inputs are images of food, our ML model is EffNetB2 and our outputs are classes of food (pizza, steak or sushi).

images of food -> EffNetB2 -> outputs

Though the concepts of inputs and outputs can be bridged to almost any other kind of ML problem.

Your inputs and outputs might be any combination of the following:

- Images

- Text

- Video

- Tabular data

- Audio

- Numbers

- & more

And the ML model you build will depend on your inputs and outputs.

Gradio emulates this paradigm by creating an interface (gradio.Interface()) from inputs to outputs.

gradio.Interface(fn, inputs, outputs)

Where, fn is a Python function to map the inputs to the outputs.

Gradio provides a very helpful Interface class to easily create an inputs -> model/function -> outputs workflow where the inputs and outputs could be almost anything you want. For example, you might input Tweets (text) to see if they're about machine learning or not or input a text prompt to generate images.

Note: Gradio has a vast number of possible

inputsandoutputsoptions known as "Components" from images to text to numbers to audio to videos and more. You can see all of these in the Gradio Components documentation.

7.2 Creating a function to map our inputs and outputs¶

To create our FoodVision Mini demo with Gradio, we'll need a function to map our inputs to our outputs.

We created a function earlier called pred_and_store() to make predictions with a given model across a list of target files and store them in a list of dictionaries.

How about we create a similar function but this time focusing on making a prediction on a single image with our EffNetB2 model?

More specifically, we want a function that takes an image as input, preprocesses (transforms) it, makes a prediction with EffNetB2 and then returns the prediction (pred or pred label for short) as well as the prediction probability (pred prob).

And while we're here, let's return the time it took to do so too:

input: image -> transform -> predict with EffNetB2 -> output: pred, pred prob, time taken

This will be our fn parameter for our Gradio interface.

First, let's make sure our EffNetB2 model is on the CPU (since we're sticking with CPU-only predictions, however you could change this if you have access to a GPU).

# Put EffNetB2 on CPU

effnetb2.to("cpu")

# Check the device

next(iter(effnetb2.parameters())).device

device(type='cpu')

And now let's create a function called predict() to replicate the workflow above.

from typing import Tuple, Dict

def predict(img) -> Tuple[Dict, float]:

"""Transforms and performs a prediction on img and returns prediction and time taken.

"""

# Start the timer

start_time = timer()

# Transform the target image and add a batch dimension

img = effnetb2_transforms(img).unsqueeze(0)

# Put model into evaluation mode and turn on inference mode

effnetb2.eval()

with torch.inference_mode():

# Pass the transformed image through the model and turn the prediction logits into prediction probabilities

pred_probs = torch.softmax(effnetb2(img), dim=1)

# Create a prediction label and prediction probability dictionary for each prediction class (this is the required format for Gradio's output parameter)

pred_labels_and_probs = {class_names[i]: float(pred_probs[0][i]) for i in range(len(class_names))}

# Calculate the prediction time

pred_time = round(timer() - start_time, 5)

# Return the prediction dictionary and prediction time

return pred_labels_and_probs, pred_time

Beautiful!

Now let's see our function in action by performing a prediction on a random image from the test dataset.

We'll start by getting a list of all the image paths from the test directory and then randomly selecting one.

Then we'll open the randomly selected image with PIL.Image.open().

Finally, we'll pass the image to our predict() function.

import random

from PIL import Image

# Get a list of all test image filepaths

test_data_paths = list(Path(test_dir).glob("*/*.jpg"))

# Randomly select a test image path

random_image_path = random.sample(test_data_paths, k=1)[0]

# Open the target image

image = Image.open(random_image_path)

print(f"[INFO] Predicting on image at path: {random_image_path}\n")

# Predict on the target image and print out the outputs

pred_dict, pred_time = predict(img=image)

print(f"Prediction label and probability dictionary: \n{pred_dict}")

print(f"Prediction time: {pred_time} seconds")

[INFO] Predicting on image at path: data/pizza_steak_sushi_20_percent/test/pizza/3770514.jpg

Prediction label and probability dictionary:

{'pizza': 0.9785208702087402, 'steak': 0.01169557310640812, 'sushi': 0.009783552028238773}

Prediction time: 0.027 seconds

Nice!

Running the cell above a few times we can see different prediction probabilities for each label from our EffNetB2 model as well as the time it took per prediction.

7.3 Creating a list of example images¶

Our predict() function enables us to go from inputs -> transform -> ML model -> outputs.

Which is exactly what we need for our Graido demo.

But before we create the demo, let's create one more thing: a list of examples.

Gradio's Interface class takes a list of examples of as an optional parameter (gradio.Interface(examples=List[Any])).

And the format for the examples parameter is a list of lists.

So let's create a list of lists containing random filepaths to our test images.

Three examples should be enough.

# Create a list of example inputs to our Gradio demo

example_list = [[str(filepath)] for filepath in random.sample(test_data_paths, k=3)]

example_list

[['data/pizza_steak_sushi_20_percent/test/sushi/804460.jpg'], ['data/pizza_steak_sushi_20_percent/test/steak/746921.jpg'], ['data/pizza_steak_sushi_20_percent/test/steak/2117351.jpg']]

Perfect!

Our Gradio demo will showcase these as example inputs to our demo so people can try it out and see what it does without uploading any of their own data.

7.4 Building a Gradio interface¶

Time to put everything together and bring our FoodVision Mini demo to life!

Let's create a Gradio interface to replicate the workflow:

input: image -> transform -> predict with EffNetB2 -> output: pred, pred prob, time taken

We can do with the gradio.Interface() class with the following parameters:

fn- a Python function to mapinputstooutputs, in our case, we'll use ourpredict()function.inputs- the input to our interface, such as an image usinggradio.Image()or"image".outputs- the output of our interface once theinputshave gone through thefn, such as a label usinggradio.Label()(for our model's predicted labels) or number usinggradio.Number()(for our model's prediction time).- Note: Gradio comes with many in-built

inputsandoutputsoptions known as "Components".

- Note: Gradio comes with many in-built

examples- a list of examples to showcase for the demo.title- a string title of the demo.description- a string description of the demo.article- a reference note at the bottom of the demo.

Once we've created our demo instance of gr.Interface(), we can bring it to life using gradio.Interface().launch() or demo.launch() command.

Easy!

import gradio as gr

# Create title, description and article strings

title = "FoodVision Mini 🍕🥩🍣"

description = "An EfficientNetB2 feature extractor computer vision model to classify images of food as pizza, steak or sushi."

article = "Created at [09. PyTorch Model Deployment](https://www.learnpytorch.io/09_pytorch_model_deployment/)."

# Create the Gradio demo

demo = gr.Interface(fn=predict, # mapping function from input to output

inputs=gr.Image(type="pil"), # what are the inputs?

outputs=[gr.Label(num_top_classes=3, label="Predictions"), # what are the outputs?

gr.Number(label="Prediction time (s)")], # our fn has two outputs, therefore we have two outputs

examples=example_list,

title=title,

description=description,

article=article)

# Launch the demo!

demo.launch(debug=False, # print errors locally?

share=True) # generate a publically shareable URL?

Running on local URL: http://127.0.0.1:7860/ Running on public URL: https://27541.gradio.app This share link expires in 72 hours. For free permanent hosting, check out Spaces: https://huggingface.co/spaces

(<gradio.routes.App at 0x7f122dd0f0d0>, 'http://127.0.0.1:7860/', 'https://27541.gradio.app')

FoodVision Mini Gradio demo running in Google Colab and in the browser (the link when running from Google Colab only lasts for 72 hours). You can see the permanent live demo on Hugging Face Spaces.

Woohoo!!! What an epic demo!!!

FoodVision Mini has officially come to life in an interface someone could use and try out.

If you set the parameter share=True in the launch() method, Gradio also provides you with a shareable link such as https://123XYZ.gradio.app (this link is an example only and likely expired) which is valid for 72-hours.

The link provides a proxy back to the Gradio interface you launched.

For more permanent hosting, you can upload your Gradio app to Hugging Face Spaces or anywhere that runs Python code.

8. Turning our FoodVision Mini Gradio Demo into a deployable app¶

We've seen our FoodVision Mini model come to life through a Gradio demo.

But what if we wanted to share it with our friends?

Well, we could use the provided Gradio link, however, the shared link only lasts for 72-hours.

To make our FoodVision Mini demo more permanent, we can package it into an app and upload it to Hugging Face Spaces.

8.1 What is Hugging Face Spaces?¶

Hugging Face Spaces is a resource that allows you to host and share machine learning apps.

Building a demo is one of the best ways to showcase and test what you've done.

And Spaces allows you to do just that.

You can think of Hugging Face as the GitHub of machine learning.

If having a good GitHub portfolio showcases your coding abilities, having a good Hugging Face portfolio can showcase your machine learning abilities.

Note: There are many other places we could upload and host our Gradio app such as, Google Cloud, AWS (Amazon Web Services) or other cloud vendors, however, we're going to use Hugging Face Spaces due to the ease of use and wide adoption by the machine learning community.

8.2 Deployed Gradio app structure¶

To upload our demo Gradio app, we'll want to put everything relating to it into a single directory.

For example, our demo might live at the path demos/foodvision_mini/ with the file structure:

demos/

└── foodvision_mini/

├── 09_pretrained_effnetb2_feature_extractor_pizza_steak_sushi_20_percent.pth

├── app.py

├── examples/

│ ├── example_1.jpg

│ ├── example_2.jpg

│ └── example_3.jpg

├── model.py

└── requirements.txt

Where:

09_pretrained_effnetb2_feature_extractor_pizza_steak_sushi_20_percent.pthis our trained PyTorch model file.app.pycontains our Gradio app (similar to the code that launched the app).- Note: